DANIEL J. SHEVOCK

Central Mountain Middle School (USA)

JACOB HOLSTER

Pennsylvania State University (USA)

June 2025

Published in Action, Criticism, and Theory for Music Education 24 (3): 165–201. https://doi.org/10.22176/act24.3.165

Abstract: This paper uses the Model for Convivial Tools to assess the advantages and disadvantages of adopting generative artificial intelligence (AI) tools, such as ChatGPT, in music classrooms. The model analyzes the adoption of new technological tools through four categories: community; waste; convenient/foolproof/accessible/noncoercive/repairable/dependable; and agency. It thereby brings to the fore ChatGPT's potential to affect community, instigate environmental harms, advance access and ease, and support agency. The authors draw on personal experience with ChatGPT to demonstrate how music educators, curriculum writers, and policy makers can assess adoption.

Keywords: AI, ChatGPT, waste, agency, convivial tools

Daniel: In the domain of AI (artificial intelligence) there is an old saying, and by “old” I[1] mean I heard it on the radio—yes, an actual radio—this week: “I wanted people to develop AI to do my dishes and mow my lawn while I have a job and time to write poems; but the people developing AI have the machines writing poems and taking my paycheck while I still have dirty dishes and overgrown grass.”

This has long been a feature of technological development: its advertising speaks a language of leisure, but the reality is often more arduous work. As teachers, we experience this every day with most of the technologies imposed upon our profession: email replaced handwritten notes and conversation; grading became data entry into multiple, uninviting programs; and managing student social challenges involves another three programs (one to communicate with parents, another to document that communication for the teacher, and a third to document those communications for administration). Music educators also face more pedagogical and curricular programs, social media for mass communication, and now AI, which is endorsed through in-school professional development.

Positioning AI

Jacob: The origin story of artificial intelligence (AI) as both a field of study and a colloquial term can be traced to the top floor of the math building at Dartmouth College in 1956 (Dartmouth n.d.). Between June and August of that year, computer scientists and mathematicians such as John McCarthy, Marvin Minsky, Nathaniel Rochester, and a few dozen others coined the term “artificial intelligence,” choosing it over alternative designations. Among the alternatives was “thinking machines,” a phrase that would later permeate Frank Herbert’s renowned novel Dune, first serialized between 1963 and 1965. In Dune, thinking machines had been outlawed under the dictum that no machine should be made in the image of man. This concept reflects the ethical and philosophical quandaries surrounding AI that took root in the field’s early stages and remain readily apparent today in hallway discussions, online forums, and education journals alike.

In 1961 at Bell Labs, physicists, programmers, and computer music pioneer Max Mathews achieved computer-synthesized singing of the folk song “Daisy Bell” on an IBM computer (Roads 1996). Although enthusiasm for computer-generated music would stall over the subsequent decades, the cultural significance of this breakthrough was quickly felt in Stanley Kubrick’s 1968 film 2001: A Space Odyssey. In a scene that continues to provoke philosophical discourse on the agency and consciousness of AI, HAL 9000 performs “Daisy Bell” during its deactivation sequence. This early exploration of musio-computational[2] limitations, and of public attitudes toward computer-generated music, foreshadowed discussions that now challenge our understanding of creative license, ownership, and cognition.

In the contemporary AI music landscape, platforms like OpenAI’s Jukebox, Udio, and Suno continue to blur boundaries between human and machine creativity through music generation. Similar to the cultural influence of the computer-generated “Daisy Bell” in the 1960s, Udio became involved in the 2024 Drake–Kendrick Lamar feud when Drake rapped over a sample of the AI-generated beat “BBL Drizzy” on “U My Everything,” which, at the time of writing, has 13 million views on YouTube and 69 million plays on Spotify.

Parallel to music AI, Large Language Models (LLMs) evolved from early natural language processing programs like the Rogerian therapy-bot ELIZA in the 1960s to more advanced efforts like Dr. Sbaitso and SmarterChild in the 1990s and 2000s (Grudin 2023; Raeini 2023). These developments paved the way for deep learning efforts that led to virtual assistants like Siri and contemporary systems such as ChatGPT. Although LLMs are not specifically designed for creating or analyzing music, they can engage in music-related conversations, explain musical concepts, and assist with components of songwriting, among many other academic and performative tasks germane to music teaching and learning. Furthermore, recent tools that embed LLMs into software development environments, like lovable.dev, allow users to generate, test, and refine code using text prompts (Lovable 2024). This approach, sometimes described as vibe coding, empowers music teachers to create interactive websites, learning modules, web-based music sequencers, digital instruments, and anything else they can dream up.

An Ever-swelling River

Daniel: Ever more and ever newer technological tools seem to rise like a swelling river in everyday music education practice. As early as the 1970s, scholars called this engorgement toward ever more progress in technology and education “growth mania”: “While evidence shows that more of the same leads to utter defeat, nothing less than more and more seems worthwhile in a society infected by growth mania” (Illich 1973, 8). As in the 1970s, the rivers are rising in ed tech, posing a dilemma educators have faced for decades: whether to lower their bucket and hoist the waters from this new swell of machine learning, or artificial[3] intelligence. Of Goodlad’s (2023) dilemmas listed in the Call for Papers, this paper addresses issues of “Concentrations of power,” and particularly point 6: “What potential ethical issues could arise from the integration of artificial intelligence in music education, broadly conceived? How should music educators adapt their practices and policies to address issues including but not limited to agency, authorship, creativity, equity, intellectual property, and labor?” (n.p.).

Additionally, because this paper uses a convivial[4] model, analyzing AI through the categories of community; waste; convenient/foolproof/accessible/noncoercive/repairable/dependable; and agency (see Bates and Shevock 2020), it brings to the fore the “environmental harms” of AI, which, in Goodlad’s (2023) observations, abet the environmental crisis: “New work on the environmental harms of building and deploying AI systems and of so-called cloud computing is ongoing” (footnote 6). As with much critical tech scholarship, Goodlad (2023) made explicit that their position is not Luddism. Rather, they “recognize automated systems already impose unacceptable harms [and] that the ‘democratization’ that AI marketing glibly extols can damage real democratic processes, ignore affected communities, and circumvent the social, political, and ecological interests at stake” (para. 4). We are not suggesting that Luddism be rejected as a philosophical aim in music education; rather, we accept Goodlad’s (2023) caution as one inspiration for our critical evaluation of ChatGPT in educational spaces through the model’s four categories, which can be used to guide the adoption or rejection of new technological tools, including machine learning ones.

As we wrote this paper, we recognized that we hold dissimilar personal philosophies. That is, we assume different premises (the foundations of what we deem to be knowledge), modes (ways of thinking through questions concerning reality), and ends (what we suppose the good life, or eudemonia, necessitates for human beings). Daniel holds a localist, ecological worldview that is skeptical of technological development, and Jacob holds a perspective that is more posthuman and optimistic toward technological development. We hope that our dissimilar personal philosophies, as we wrestled with ChatGPT adoption through analysis and reflection, make this a worthwhile polyphonic document for the reader.

The Model for Convivial Tools

Pope Francis, as head of a church of 1.39 billion members, has been a voice for global ecological stewardship since his election, especially in his encyclical Laudato si’ (2015) and his apostolic exhortation Laudate Deum (2023).[5] He suggested that AI is “neither objective nor neutral” (Tulloch 2024). He identified the potential for AI to democratize access, advance scientific research, and reduce arduous work, but noted that it can also lead to greater injustices between nations in the Global North and Global South, as well as between oppressing and oppressed social classes.[6] He called AI a tool leading toward a “techno-human condition,” which can open individuals to what is beyond themselves and foster creativity or, because algorithmic choices limit possibilities to predefined criteria, confine action to what can be “formalized in numerical terms” alone (Tulloch 2024). He insisted that important decisions must always be left to humans and that machine learning in autonomous weapons must be banned. In education, he cautioned, machine learning programs can undermine the learning process by substituting repetition for genuine human reflection. The Pope’s statement clarifies that the Catholic Church has a set of criteria for adopting or rejecting technology. Similarly, our field has been offered a set of criteria: the Model for Convivial Tools.

Jacob recently described ChatGPT[7] as beneficial for music education, with the potential to redefine pedagogical and administrative tasks. Holster (2024) described ChatGPT as an innovative, time-saving service, focusing on practical applications for music educators, and suggested that it is important for the adoption of AI to remain student-centered, inclusive, and supportive. Holster also discussed negative aspects of ChatGPT, including student data privacy and bias, marking a shift in music education technology discourse, which has been overly optimistic and has ignored the negative aspects of adopting technological tools. Such uncritical adoption has occurred alongside disastrous ecological effects related to high energy consumption and demand on already dwindling water sources for cooling (see Murphy 2017; Selwyn 2021[8]; Shevock and Bates 2025). Of particular interest to music teachers should be Holster’s (2024) insistence that teachers using ChatGPT must monitor for discriminatory language and representations.

In this paper, we analyze ChatGPT, a machine learning tool informally called AI, through the lens of Bates and Shevock’s (2020) Model for Convivial Tools, a framework for identifying useful tools that serve human ends. ChatGPT (OpenAI 2023) was chosen because of its pervasiveness and availability. The model analyzes the adoption of new technological tools, in this case machine learning, through four categories: 1) community; 2) waste; 3) convenient/foolproof/accessible/noncoercive/repairable/dependable; and 4) agency.

This model offers a lens for analyzing technology during adoption phases. The basic concept is that if a technology promotes community and agency, reduces waste, and proves to be a convenient/foolproof/accessible/noncoercive/repairable/dependable tool, then adoption is logical and ethical. If the opposite is true, the tool ought not to be adopted. Most technologies fall along a spectrum in relation to these categories, including the various social media analyzed by Bates and Shevock (2020) and ChatGPT as analyzed in this paper. Daniel used ChatGPT running GPT-3.5, the original free model released in November 2022 and since discontinued, and Jacob used GPT-4o, an improved model released in May 2024. ChatGPT, and by extension similar machine learning tools, can be adopted or rejected by learning communities based on these categories; while the current paper performs such an analysis in general, we contend that a general analysis should not outweigh a local analysis done by teachers, administrators, and students in any given learning space. Here we echo Bates and Shevock’s (2020) calls to empower local musical communities, local economies, local cultures, and local ecosystems. Global systems can feel impersonal, wasteful, and unavoidable, but the model we used for analysis is just the opposite, enabling actions within the reach of the actor.

Community

Bates and Shevock (2020) recommend that newly adopted technologies increase community, resisting the individualism rampant in the Global North. Authors such as Bates (2014) and Shiva (2010) emphasized community as proximal, relatively small and local, relationship-building, and having particularities, including shared resources or commons. According to these authors, online communities provide important opportunities for building relationships, but the soundest forms of community are fixed in physical place, cultivating greater intradependence. Machine learning tools might be used to strengthen geographical community by inspiring local being and acting to improve a place. For example, a machine learning tool may lower the bar for entry into an unfamiliar activity, such as by offering students lyrics to begin a songwriting project. Many scholars have been uprooted from geographical places, from intergenerational relationships, and from local ways of knowing; and many scholars, us included, have worked hard to re-root ourselves in new soil, like dandelions on Turtle Island.[9] Dandelions are not indigenous but offer nutrition and sustenance to indigenous beings. While many recent technological developments have focused our attention on the “Global community” and on interest groups made up of people in distant places, it will be interesting to see how machine learning tools can help localize music teachers and learners, especially in light of the long-established global destruction of local commons (Shiva 2010) and the simple truism that every human person lives physically, every day, in a particular, tangible, local community.

Daniel: Interested in how well a machine learning tool can assess a particular local community and offer potential songs for classroom use, I prompted ChatGPT, “What are environmental challenges specifically facing Centre County, PA?” I chose this issue because of my commitments as an eco-literate music teacher and because of where I live and teach. Further, as I interacted with ChatGPT, I refined my questions, teaching myself how to interact with this particular tool—likely, time spent revising questions is part of all human interactions with AI. I received in response:

Centre County, PA, like many regions, faces a variety of environmental challenges, some of which are specific to its local geography and population dynamics. Here are some key environmental challenges faced by Centre County:

- Water Quality and Quantity: Ensuring clean water for residents, agriculture, and ecosystems is crucial. Issues such as pollution from agriculture runoff, urban stormwater, and wastewater treatment can affect local streams and groundwater.

- Land Use and Urbanization: Balancing urban growth with conservation of natural habitats is a challenge. As Centre County experiences development pressure, preserving open spaces, forests, and farmland becomes increasingly important.

- Climate Change Impacts: Increasing temperatures, changing precipitation patterns, and extreme weather events pose risks to agriculture, infrastructure, and public health. Adapting to these changes and mitigating greenhouse gas emissions are ongoing challenges.

- Waste Management: Effective management of solid waste, recycling programs, and hazardous waste disposal are essential for maintaining environmental health.

- Transportation Emissions: Addressing emissions from vehicles and transportation infrastructure is important for air quality and climate change mitigation.

- Natural Resource Management: Sustainable management of forests, wildlife habitats, and recreational areas is critical for biodiversity conservation and ecosystem health.

- Environmental Education and Awareness: Increasing public awareness about environmental issues and promoting sustainable practices can help mitigate many of these challenges.

- Energy Use and Efficiency: Promoting renewable energy sources and improving energy efficiency in buildings and infrastructure can reduce environmental impacts.

By addressing these challenges through policy initiatives, community engagement, and technological advancements, Centre County can work towards a more sustainable future while preserving its natural resources and quality of life for residents.

Relevant to the model, this list can increase my knowledge of the local Community by identifying the issues it faces, which may inspire action. Some of the eight points generated by ChatGPT might be too broad for effective local action, requiring political lobbying rather than obvious local action. In my estimation, however, students can be brought into efforts to protect local water sources, act against unsustainable local land-use developments, help improve local recycling and reuse programs, work for the conservation and expansion of local state parks, game lands, and forests, and promote awareness and energy efficiency. To see which point this machine learning tool would highlight, I then prompted, “Please recommend one specific action I can take that will have particular impact on Centre County, PA.”

One specific action you can take that will have a particular impact on Centre County, PA, is to get involved in local conservation efforts, especially those focused on protecting water quality and quantity. Here’s how you can contribute effectively:

Action: Volunteer for or support local watershed organizations.

- Why it matters: Centre County is home to numerous watersheds that are vital for providing clean drinking water, supporting aquatic ecosystems, and sustaining agriculture. However, these watersheds are vulnerable to pollution from various sources, including agriculture runoff, urban development, and inadequate wastewater treatment.

- What you can do:

- Join a watershed organization: Many local groups, such as ClearWater Conservancy, Centre County Conservation District, or specific watershed associations like Spring Creek Watershed Commission, actively work to monitor water quality, restore streams, and advocate for policies that protect watersheds.

- Volunteer for clean-up events: Participate in stream clean-up activities or tree planting events organized by these organizations. This hands-on approach directly improves water quality and enhances habitat for aquatic species.

- Advocate for policies: Support local initiatives and policies that aim to reduce pollution, conserve water resources, and promote sustainable land use practices. Engage with local government officials and community leaders to raise awareness about the importance of watershed protection.

By focusing on local watershed conservation efforts, you can make a tangible impact on the environmental health of Centre County, ensuring cleaner water for future generations and preserving the natural beauty of the region.

These three recommendations, in particular, can further deepen my pedagogy toward sustaining community and resisting waste. Additionally, because they suggest specific ways teachers and students might work for local watershed sustainability, they recommend a path forward, and the category Agency means to act. In particular, I can design a lesson for my students to take one or more of these actions. As a music teacher, my mind turns to using a song to anchor pedagogical action. I prompted ChatGPT, “Are there any songs I can share with my students that would increase empathy toward our local watersheds?”

Absolutely! Music can be a powerful way to evoke emotions and increase empathy towards environmental issues like the health of local watersheds. Here are a few songs that you can share with your students:

- “Big Yellow Taxi” by Joni Mitchell

- This classic song includes the famous line “They paved paradise and put up a parking lot,” which can spark discussions about urban development and its impact on natural environments, including watersheds.

- “A Change Is Gonna Come” by Sam Cooke

- While not specifically about watersheds, this song can inspire empathy and a sense of urgency for positive change in environmental stewardship, including protecting local natural resources.

- “Black Water” by The Doobie Brothers

- This song celebrates the beauty and importance of water in nature, which can help students appreciate the value of local watersheds and ecosystems.

- “Big River” by Johnny Cash

- A song that vividly describes the life and power of a river, it can help students connect emotionally to the importance of healthy watersheds and rivers.

- “Earth Song” by Michael Jackson

- This powerful song addresses environmental issues broadly, including water pollution and the impact of human activities on the planet, encouraging empathy and a call to action.

- “River” by Leon Bridges

- A soulful song that reflects on the beauty and significance of rivers, prompting reflection on the importance of protecting water bodies like those in Centre County.

- “Dirty Water” by The Standells

- Though originally about the polluted Charles River in Boston, this song can prompt discussions on water pollution and the need for clean water sources everywhere.

- “Clear Blue Water” by Madeleine Peyroux

- A calming and reflective song that can help students appreciate the beauty and tranquility of clean water, fostering empathy for local watersheds.

These songs can serve as starting points for discussions about environmental stewardship, the importance of clean water, and the impact of human activities on local ecosystems like watersheds. Pairing these songs with discussions on local watershed issues and community actions can deepen students’ understanding and empathy towards protecting their local environment.

I have already used five of the eight recommended songs in my general music classes, but three were new to me. “Big Yellow Taxi,” written by Joni Mitchell in 1970, serves as a broad critique of ecological challenges linked to development. It is therefore appropriate as we paradoxically consider incorporating a new technological tool. In “A Change Is Gonna Come,” written in 1964, Sam Cooke compares his life to a river; the song is broadly about social change but not particular to the ecological crises. “Black Water,” written by Patrick Simmons and performed by the Doobie Brothers in 1974, can generally cultivate an appreciation for nature, especially the Mississippi River, as well as encourage an appreciation for slowing down. Matsunobu (2013) connected slowing down to ecological approaches to shakuhachi education. Slowing down, which Matsunobu called a “‘slow-food’ approach to music education” (199), increased participant focus on the local, on history, and on personal learning processes. These findings emerged specifically from an ecologically oriented non-Western music learning experience.

Written in 1958 by Johnny Cash, “Big River” is about lost love: as the narrator travels down the river to the Gulf of Mexico, he realizes “that woman” loves the river more than she loves him. “Earth Song” was written by Michael Jackson in 1995 and deals broadly with war, animal extinction, and the need for faith and repentance. “River” was written by Leon Bridges in 2015 and is about missing home and about the river as a place for baptism and repentance. “Dirty Water” was written by Ed Cobb and performed by the Standells in 1965; it uses the idea of dirty water to refer to Boston’s “lovers, muggers, and thieves.” I failed to uncover any information about “Clear Blue Water” by Madeleine Peyroux, a jazz singer, though she does sing “River,” the Christmas song Joni Mitchell wrote in 1971, whose tranquil feeling resembles ChatGPT’s description. Since eco-literacy is a process of teaching music toward increased knowledge of, and ability to act with regard to, the ecological crisis, I ultimately feel that ChatGPT’s recommendations failed the music teacher looking to identify songs for eco-literacy. The suggested songs were written between 1958 and 2015, with five of the seven datable songs written between 1958 and 1974, suggesting they were not written with the current severity of the ecological crises in view. Music teachers hoping to be introduced to newer songs might be disappointed.

More importantly, however, most of the songs recommended by the tool did not have a clear ecological message or theme, instead using nature terms to highlight other issues: social change, home, baptism, and crime. Even “slowing down,” a theme of “Black Water,” isn’t obviously useful toward these ends. Still, ChatGPT provided information about my community, identified its challenges, and was able to suggest which was most important. It is hard to be certain that protecting local waterways is the most important challenge, but, adding my own knowledge, watershed challenges seem critical, and the AI-identified groups working for watershed conservation are locally active; I have even been to ClearWater Conservancy events, including an educational summer hiking series, Centred Outdoors. In my reflective analysis, within the context of the categories of community, waste, and agency, this ChatGPT prompting session had mixed usefulness, offering direction on local issues and specific actions but failing to generate the most useful songs.

Jacob: As AI tools like ChatGPT become more sophisticated, they begin to challenge anthropocentric notions of cognition, communication, and the nature of community itself, concepts discussed earlier by scholars such as Braidotti (2013). One potential consequence of this challenge is a flattening of traditional hierarchies that decenters the human monopoly on language and art and thus positions teachers, students, and AI systems as equal partners in the learning process. From this perspective, ChatGPT might expand beyond our conception of a tool and become a collaborative agent in the collective construction of knowledge and social realities.

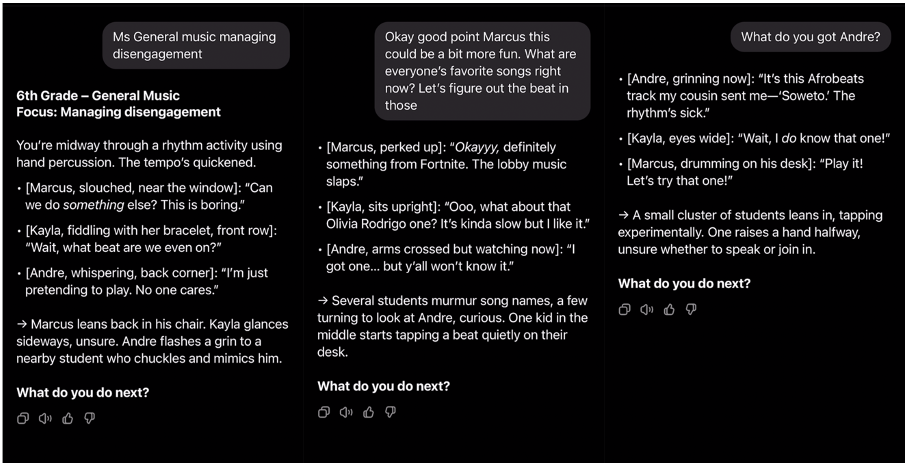

As an educator and researcher, I attempt to include the voices of ChatGPT and similar systems as participants in learning communities, with the capacity to co-create knowledge and experiences with humans in the learning space. In a course I teach called “Creating and Learning with AI,” one of my goals is to immerse students in the latest AI tools. To do this, I have them develop projects that juxtapose AI images, videos, music, custom chatbots, and website mockups. I encourage them to create projects that respond to problems they see in communities they care about and to consistently ask ChatGPT for advice about next steps in development. Through this project-focused lens, AI-student interactions can be centered as collaborative processes that enhance creativity, discourse, and community engagement. Similarly, one of my research projects focuses on integrating ChatGPT as a professional development tool, particularly in helping professions like teaching, counseling, social work, and nursing. For instance, Figure 1 shows a current prototype of a custom GPT called “Classroom Coach,” which provides a low-stakes simulated teaching environment in which preservice teachers can practice navigating challenging classroom moments across any grade level or subject area.[10]

Figure 1: Classroom Coach Chatlog


This chatlog demonstrates one potential for integrating AI into a professional learning community, in this case a preservice music teacher education setting. This custom GPT lacks context that might come from human observers, including culturally sustaining, trauma-informed, and adaptive strategies related to local communities. However, these applications are malleable: developers can provide custom instructions and context documents that shape interactions toward the frameworks they are interested in. For example, this output might be improved by drawing on relational ethics and culturally responsive pedagogies in the planning process.
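Custom GPTs such as Classroom Coach are configured through ChatGPT’s builder interface (written instructions plus uploaded knowledge files), so its actual instructions are not reproduced here. As a hypothetical sketch of the same idea, the Python below layers named frameworks and context documents onto base instructions, packaging them in the role/content message format that chat-style LLM APIs accept. The function name `build_messages`, the instruction wording, and the placeholder context document are illustrative assumptions, and no model is called.

```python
# Hypothetical sketch: shaping a teaching simulation by layering frameworks
# and context documents into a system message (role/content chat format).
# Names and wording are illustrative assumptions; no API call is made.

BASE_INSTRUCTIONS = (
    "You simulate a challenging classroom moment for a preservice music "
    "teacher. Stay in character as the students, then offer brief feedback."
)


def build_messages(
    base: str, frameworks: list[str], context_docs: list[str], user_turn: str
) -> list[dict[str, str]]:
    """Return a two-message list: a system prompt that combines the base
    instructions, the named frameworks, and any context documents, followed
    by the preservice teacher's turn."""
    parts = [base]
    if frameworks:
        parts.append("Ground your responses in: " + "; ".join(frameworks) + ".")
    for i, doc in enumerate(context_docs, start=1):
        parts.append(f"Context document {i}:\n{doc}")
    return [
        {"role": "system", "content": "\n\n".join(parts)},
        {"role": "user", "content": user_turn},
    ]


messages = build_messages(
    BASE_INSTRUCTIONS,
    ["relational ethics", "culturally responsive pedagogy"],
    ["(Placeholder) Notes on the local school community go here."],
    "Two students start arguing during warm-ups. What do I do?",
)
for m in messages:
    print(m["role"], "->", m["content"][:60])
```

Swapping the framework list or context documents changes how the same simulation responds without rewriting the base instructions, which is the kind of malleability described above.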

Beyond this example, ChatGPT can embody and demonstrate expertise in community roles including those of a classmate, faculty member, friend, colleague, or student. Although the ability to provide worksheets and lesson plans is valuable on its own, ChatGPT also offers support that helps users work through challenging tasks, navigate interpersonal situations, and make complex plans simultaneously, an emerging capability not previously seen from non-human agents in community partnerships. Echoing Turkle’s (1995) reflection on early digital spaces, I would argue that ChatGPT functions as a place itself, fostering community values through conversations and instructions that guide users toward shared goals. However, ChatGPT also expands the traditional notion of place, extending educational and communal spaces beyond the local and creating a shared, globally accessible platform that can connect diverse experiences and perspectives, much like a classroom without walls (Castells 1996), or a relational agent within distributed communities, including preservice teacher education.

Waste

As our field adopts new technological tools, it is essential that we analyze them from an ecological standpoint (Bates and Shevock 2020). This is true for other fields as well. Goodlad (2023), whose editorial is used in the Call for Papers, points readers to water use, warning against classrooms adopting tools that “squander energy and water at scale” (para. 23). United Nations statements on AI have mostly centered on governance and ethics, but e-waste has long been a concern for the United Nations, which has noted that e-waste generation is growing five times faster than e-waste recycling (see Figure 2: E-waste).[11] De Silva, Ranasinghe, and Riham (2023) likewise suggested, “If digitalization is not done carefully, it could add to the growing environmental problems, from higher energy use to more electronic trash” (161). ChatGPT, like any technological tool, has potential advantages and challenges in relation to the ecological crises facing humanity in the 21st century. One asset that has emerged is ChatGPT’s potential to aid in climate change prediction models. Because of the complexity and scale of global climate change, machine learning provides a sophisticated tool to model and project future climate conditions by aiding in parameterization, analyzing and interpreting data, generating scenarios, and evaluating existing models (Biswas 2023).

It was difficult to find documentation on the environmental impacts of machine learning tools specifically. It is known, however, that machine learning tools, including ChatGPT, use extensive amounts of water. George, George, and Martin (2023) analyzed AI water consumption in two components, direct and indirect. Direct water consumption refers to water used in “cooling systems of data centers and the production of microchips used in AI. Indirect water use includes the water used in the production of electricity used to power the data centers that run AI models” (92–93). In its training phase, GPT-3 consumed 700,000 liters of water: “This is a staggering amount of water, particularly when one considers that GPT-3 is just one AI tool among many” (93). This level of consumption is on par with other high-tech systems. The authors recommend improving energy efficiency, optimizing data center location, utilizing alternative cooling methods, minimizing unnecessary computations, monitoring water consumption, encouraging environmental awareness, and implementing water reuse systems. However, these recommendations are beyond the scope of music education, where our agency lies in our choice to adopt or not. With water use described as “staggering,” music educators ought to be careful when replacing low-tech instructional practices with machine learning tools.

In contrast with the water that machine learning tools pollute, water has historically meant much more to people—waters are parts of dreams, of the mystical and the beautiful. Students ought to learn to dwell with and love waterways, and ultimately to protect them from the industrial expansions that threaten human life on Earth. Students can learn to ritualize waterways through writing songs, creating a play or musical, and integrating music, history, art, and ecological information (see Shevock 2015, 17). Playing with music and local waterways is a way of spiritual dwelling in a definite place: “Dwelling means living insofar as each moment shapes a community’s own kind of space” (Illich 1986, 9). As such, the category of waste leads us to the next category, which can be summed up as the ways in which we dwell with a technological tool.

Jacob: For an upcoming AI project, I have been quoted an estimated 55 million tokens and up to 430 GPU hours. GPUs, such as those used to run the complex algorithms behind ChatGPT, can consume significant amounts of electricity. For instance, the NVIDIA H100 GPUs that power ChatGPT can draw about 700 watts when operating at full capacity, or roughly 0.7 kWh for every hour of use. Over 430 hours, this amounts to approximately 301 kWh, with the actual figure depending on usage patterns and load factors. This figure is supported by studies on GPU power consumption and its environmental impact (NVIDIA n.d.; Towards AI 2023). Using average figures for carbon emissions from electricity production, and, ironically, with support from ChatGPT to calculate these figures, I estimate that this project will pump between 70 and 100 kg of CO2 into the atmosphere, depending on a variety of factors in execution, which is roughly equivalent to driving a car about 200 miles (United States Environmental Protection Agency n.d.). ChatGPT made these calculations by drawing on the Machine Learning CO2 Impact calculator, which incorporates detailed inputs about GPU usage, power consumption rates, and regional carbon intensity to estimate a project’s carbon emissions (Lacoste et al. 2019). Although our project is relatively small compared to others on my campus and in the AI research world, the environmental impact of AI research at large is staggering. For instance, single AI studies have been estimated to leave carbon footprints between 26 and 626 metric tons of CO2, equivalent to as many as 7,745 cars driving 200 miles or the yearly emissions of up to 38 average American homes (Strubell et al. 2019).
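The energy arithmetic in this passage can be checked in a few lines. The Python sketch below is our own illustration, not the calculator ChatGPT drew on; it assumes the GPU draws its full 700 W for all 430 hours and then works backward from the 70 to 100 kg estimate to the grid carbon intensity that range implies.

```python
# Back-of-the-envelope check of the GPU energy estimate in the text.
# Assumption: the GPU runs at its full ~700 W draw for all 430 hours;
# real jobs rarely do, so this behaves like an upper-bound estimate.

GPU_POWER_KW = 0.700  # 700 W expressed in kilowatts (a rate, not "per hour")
GPU_HOURS = 430       # quoted upper bound on GPU hours for the project


def energy_kwh(power_kw: float, hours: float) -> float:
    """Energy (kWh) = power (kW) x time (h)."""
    return power_kw * hours


def implied_intensity(kg_co2: float, kwh: float) -> float:
    """Carbon intensity (kg CO2 per kWh) implied by an emissions estimate."""
    return kg_co2 / kwh


kwh = energy_kwh(GPU_POWER_KW, GPU_HOURS)
low = implied_intensity(70, kwh)    # lower end of the 70-100 kg range
high = implied_intensity(100, kwh)  # upper end of the 70-100 kg range

print(f"Energy: {kwh:.0f} kWh")
print(f"Implied grid intensity: {low:.2f} to {high:.2f} kg CO2/kWh")
```

Run as written, it prints an energy figure of 301 kWh and an implied intensity of roughly 0.23 to 0.33 kg of CO2 per kWh. Real grid intensity varies widely by region, which is one reason estimates like this are given as ranges.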

Fortunately, there are efforts underway to shrink AI’s environmental footprint. Data centers are increasingly powered by renewable energy sources. Google has committed to operating on 24/7 carbon-free energy by 2030, and it already powers many of its data centers with renewable energy (Google 2021). Companies are also improving cooling systems to cut energy waste (UNEP 2021). However, significant hurdles remain, including the Jevons Paradox, which suggests that as AI becomes more efficient, more people may begin to use it, potentially offsetting the gains in energy savings (Di Baldassarre et al. 2018). Additionally, accurately measuring AI’s environmental impact remains difficult due to the varied and global nature of energy systems, which makes it challenging to standardize and compare data across regions and systems. Furthermore, companies that develop frontier models, such as OpenAI with ChatGPT, typically disclose neither the time, data, or energy used to train and test models before public release nor the environmental impacts of data center management.
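The logic of the Jevons Paradox reduces to simple multiplication: total energy equals energy per use times the number of uses. The sketch below uses invented, hypothetical numbers in abstract "energy units" (not measurements of any AI system) to show how halving the energy per query can still raise total consumption if usage triples.

```python
# Illustration of the Jevons Paradox with hypothetical numbers only.
# Units are abstract "energy units," not measurements of any real AI system.


def total_energy(energy_per_query: float, queries: int) -> float:
    """Total energy = energy per query x number of queries."""
    return energy_per_query * queries


# Hypothetical baseline: 1.0 unit per query, 1,000 queries.
before = total_energy(1.0, 1_000)

# Hypothetical future: each query is twice as efficient (0.5 units),
# but cheaper, faster AI invites three times as many queries.
after = total_energy(0.5, 3_000)

print(f"Energy per query halved, queries tripled: total x{after / before:.2f}")
```

The efficiency gain is real on a per-query basis, yet the total rises by half, which is the offsetting effect the paradox describes.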

Convenient/Foolproof/Accessible/Noncoercive/Repairable/Dependable

To dwell with a tool is to understand the extent to which it is convenient/foolproof/accessible/noncoercive/repairable/dependable. Here these words are treated as a single part of conviviality (Bates and Shevock 2020). The slashes emphasize the diversity within this single concept. To the authors’ knowledge, no single word unifies these terms, though together they mark one part of conviviality. Accessibility within ed tech is not separate from convenience or a tool’s foolproofness. At the same time, no single word in this theme overrides the others. This is true for all new technological tools and is not unique to AI. Each of these points in the third part of the Model for Convivial Tools may be more or less important in a given classroom setting or in relation to a given technology. Ultimately, though, if schools are going to incorporate these tools into praxis, the tools must be useful for student learning, certain in their use (without misleading errors or hallucinations), user-friendly (ease), amiable, fixable, and reliable. Machine learning tools seem, if nothing else, convenient. ChatGPT is prevalent (when you search for research on machine learning, it is often research analyzing ChatGPT), and it is accessible: one need only go to https://chatgpt.com/ and begin entering prompts. As with any consumer product, though, participating in ChatGPT-based pedagogical approaches requires a computer and a reliable internet connection, which many schools, globally, do not have. Additionally, previous examples suggest that ChatGPT is not entirely foolproof, requiring proficiency at prompting. Time spent teaching students prompting skills may be worthwhile in schools where music classroom time is not a limiting factor. Coerciveness depends on the way ChatGPT is introduced to schools. Many technologies are established at the curricular level and are, therefore, coercive. Further, it is unlikely that individual students will be given the tools to repair ChatGPT, as that work is done by trained professionals. Dependability also relies on many factors, including internet access and access to high-quality hardware.

Daniel: Inspired by my students exploring ChatGPT in a class I teach, Guitar 2 (see Agency section, below), I decided to use ChatGPT to write a religious poem for meditation. I chose to use this machine learning tool because religion is the purview of human intelligence—as a Catholic Christian, I understand God as the source of intelligence, the Logos (Gen 1:1-3; Jn 1:1-3), and the subject of our intelligent, feelingful creations, such as poems. I was also inspired by France, Chun, and Elkins (2023), who in their research found that ChatGPT “showed a remarkable propensity to create songs quite similar to those of Young Thug.” If ChatGPT can create songs resembling the songs of this artist, perhaps it can create a meaningful religious poem. I began with the following prompt: Write a 4-line poem based on the Canticle of the Sun. This effort offered me a chance to hone my prompting skills. ChatGPT offered me this initial poem:

Sun and moon, stars that brightly gleam,

Brother wind and sister rain serene,

Praise for earth and every living thing,

In harmony, creation’s song we sing.

I felt this poem was overly standard. By standard I mean overly conventional and ordinary. I hoped for something a little more distinctive, so I continued with a more challenging prompt: Please rewrite this poem removing any direct quotes from Canticle of the Sun and allude to, more than restate, the material. I received a far better poem:

Sun’s golden kiss, moon’s soft embrace,

Wind whispers, rain’s gentle grace,

Earth’s dance with life, a cosmic hymn,

In harmony, creation’s joy brims.

However, I wasn’t happy with the word “brims” at the end, so I prompted ChatGPT: Please rewrite this poem changing the word brims, and received: “In harmony, creation’s joy swims.” I didn’t really like “swims” either, so I prompted: Please rewrite changing the phrase “creation’s joy swims” to something different. At this point, ChatGPT gave me “creation’s joy brims” again, leading me to prompt even more precisely: Please rewrite “creation’s joy brims” and don’t use the words “creation’s” or “joy” or “swims” or “brims.” At this point I felt like I was talking to one of the Commodore 64s[13] we had in fifth grade. I received this final line: “In harmony, universe’s bliss glows dim.” Well, that was different. I realized it was time to rewrite the poem myself, taking some of the suggestions, phrases, and words from ChatGPT, but putting more of myself into it.

Sun’s kiss, moon’s embrace,

Wind’s whisper, God’s Grace,

Veiled step of cosmic union,

In harmony, breathe communion

Here are the transformations I made. Line 1: I changed “golden” to “soft” and “soft” to “mild” to add alliteration (s’s in the first half; m’s in the second) and simplify the language. Then, when I tried to sing it as a meditation, I removed both “soft” and “mild.” Line 2: I changed “Wind whispers” to “Wind’s whisper,” mostly because I misread “wind whispers” as “wind’s whisper,” which emphasizes the living nature of Francis’s earth; I also changed “rain” to “God” and capitalized “Grace.” After all, this is a religious meditation, and Francis is a saint. Line 3: This line made me think of Teilhard de Chardin’s Hymn of the Universe,[14] which led to the greatest change from the original. Inspired by Teilhard, I transformed this line to “Veiled step of cosmic communion.” Line 4 then became “In harmony, breath union,” which is okay but maybe still needs some work. Then I began looking at the whole poem as a meditative song, changing “breath” to “breathe” and switching “communion” and “union.” I ended up with a poem much different from the one I began with, yet one that remains true to St. Francis’s original poem.

Ultimately, ChatGPT seems extremely convenient, somewhat foolproof, particularly accessible, and dependable. Because ChatGPT use is not a directive of my school district, it is noncoercive, and if I were to add it to my curriculum as part of an improvisation, composition, or songwriting session, I would make its use optional to avoid coercion. Repairability is beyond the reach of teachers and students. Will ChatGPT become buggier and unusable over time, much like MySpace after it was displaced by Facebook? I suspect so, especially as machine learning tools become ubiquitous. The recent history of technological development has been a story of the commodification of once-free services.

Additionally, when analyzing an educational tool’s conviviality, accessibility ought also to be considered in relation to disability. I was particularly interested in the accessibility of ChatGPT, a text-heavy tool, for my students who have reading-related disabilities. A full analysis of this is best done by individuals with particular disabilities as they interact with it. As an improvising musician and a person who has had dyslexia since childhood, I am interested in the ways machine learning tools aid the creative process, and as such, I was drawn to a paper by Botchu, Iyengar, and Botchu (2023), who suggested that ChatGPT assists as a learning tool, supporting creativity and engagement, offering other supports, and reinforcing inclusion. However, they also indicated that ChatGPT’s interaction mode instigated frustration and showed a lack of responsiveness for students with dyslexia. Having long ago developed techniques for working around my dyslexia, which I learned in elementary school in the 1980s, I recognized some of my own misreadings as both limitations and openings: places where I could write my own self into the process, prompted by the text as I understood it. Machine learning tools do not offer an intelligence to misunderstand or offend.

Ultimately, in using ChatGPT to write this poem, I met the limits of this AI tool’s intelligence. But limitations are not always a bad thing: fans of the guitar do not listen to it because its sonic potential is unlimited. Similarly, the creative use of ChatGPT is not necessarily negligible just because it has limits. Machine learning tools are often called AI, but they are not truly intelligent in the ways we humans commonly use the term. They are powerful tools, but not consciously intelligent. As Li, He, and Guo (2021) wrote, “All of these tools are unconscious”; they ought rather to be considered “the external extension of human intelligences” (177). As an external extension of my religious intelligence, ChatGPT served as inspiration, though it could have become a barrier to creation. As a result, teachers incorporating ChatGPT into student creation, improvisation, composition, and songwriting must be aware of their students’ abilities and decide whether barriers are likely to become challenges that inspire greater creativity or obstacles that stop students from continuing. Similarly, in music improvisation pedagogy, free improvisation prompts, preset chord changes, riffs, and the like can present both challenges and affordances. Like music improvisation prompts, machine learning tools can inspire or obstruct agency.

Jacob: One consequence of emerging technology is inconsistent outcomes. That said, the quality of interaction with ChatGPT often depends on the strategies the user employs. At the same time, ChatGPT demonstrates the ability to respond to a wide range of instructions and to perform most tasks that are clearly articulated and patiently iterated. Today’s AI tools already support multimodal interactions across text, voice, video, music, and digital imagery. In my personal experience, ChatGPT and other AI tools broadly score high marks in this category. However, effective responses usually require multiple attempts, even when using literature-supported practices in prompt engineering (Table 1). Prompt engineering is the process of designing and refining prompts to get more interesting and useful outputs from AI, mirroring the iterative processes humans experience in art making, writing, poetry, and other creative tasks (du Sautoy 2019). ChatGPT is a general model designed to cater to diverse requests, yet it produces individualized responses, meaning the same input can yield different outputs each time it is queried. Currently, those who elect to use ChatGPT must accept this reality: the inherent variability and occasional shortcomings are part of the process. Despite this, with patience and varied prompting strategies, ChatGPT can handle complex tasks, such as programming, debugging code, developing websites, searching the internet for up-to-date information, synthesizing complex theories, and translating languages.

Techniques like zero-shot prompting (where no examples are given), few-shot prompting (where a few examples are given), reflexivity, and step-by-step instructions can significantly enhance the quality of outputs (Kojima et al. 2023; Shinn et al. 2023). Lately, I always try to communicate directly, and in detail, who I am and what I am trying to do. Providing ChatGPT with multiple examples of effective writing also helps. For instance, for the ChatGPT-generated Music Educators Journal manuscript, I provided examples of my academic writing for it to refer to in terms of style, tone, and even philosophical underpinning. I also asked it to reflect on the quality of its output and to consider ways to improve it. More recently, I have started to invite ChatGPT to ask me questions about my intended outcome to bridge the gap between my communication skills and its ability to understand my hopes. It is also useful, although the reasons are not clear, to ask it to think “step by step” as it executes its next task. Using these tools is an exercise in trial and error. Additionally, different tools within the AI landscape are suited to different tasks; for instance, Claude 3.5 Sonnet is noted for producing more human-like writing, and ChatGPT is known to be capable of producing lesson plans and worksheets for teachers to use in their classrooms (Mollick 2023).

The process of using ChatGPT effectively often comes down to crafting the best possible questions or requests. Users are encouraged to ask the model to improve its own output through particular lenses, to ask clarifying questions, and to iterate on prompts to refine the outputs. This iterative process can yield different results depending on the time and context, so the final output is the best possible response at that moment. Although ChatGPT is not infallible, it is highly flexible and capable of continual improvement.

GPT-4 and similar tools are designed to be user-friendly and amiable, but this can lead to issues like “hallucinations,” where the model generates incorrect information with high confidence (Weiser and Schweber 2023). Despite these challenges, these tools are on a path of continual improvement, and their applications and reliability are expected to improve as the technology evolves. The key to effective use lies in users understanding a tool’s strengths and weaknesses, using effective prompting strategies, and being patient with the iterative process of refining outputs. For the time being, consider the following strategies (Table 1) to support the repairability of ChatGPT’s outputs.

| Strategy | Description | Example |
|---|---|---|
| Iterative Refinement | Make multiple attempts to refine outputs, similar to human creative processes. | “Let’s improve this response. Can you make it more concise while maintaining key points?” |
| Contextual Priming | Provide detailed self-introduction, goals, and writing examples. | “I’m a music teacher writing an academic paper. Here’s an example of my writing style: [insert sample]” |
| Reflexivity | Ask the AI to reflect on its output and suggest improvements. | “How could you improve this response? What aspects could be clearer or more detailed?” |
| Interactive Dialogue | Engage in a back-and-forth, inviting the AI to ask clarifying questions. | “Before you proceed, do you need any additional information from me to better understand the task?” |
| Structured Thinking | Request step-by-step thinking or specific approaches to problem-solving. | “Please think through this problem step by step, explaining your reasoning at each stage.” |
| Few-shot Prompting | Utilize zero-shot, few-shot, and other prompting methods to guide responses. | “Here are two examples of how to solve similar problems. Now, solve this new problem using the same approach.” |

Table 1: Prompting strategies for repairability.
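The difference between zero-shot and few-shot prompting in Table 1 comes down to what text the user sends. As a rough illustration only, the Python sketch below builds both kinds of prompt as plain strings; it does not call ChatGPT or any other service, and the function names and the triad-spelling task are hypothetical examples of our own.

```python
# Sketch of how a zero-shot prompt differs from a few-shot prompt.
# Only the prompt text is built here; no AI service is called.
# The function names and the music-theory task are hypothetical examples.


def zero_shot(task: str) -> str:
    """A zero-shot prompt states the task with no worked examples."""
    return task


def few_shot(task: str, examples: list[tuple[str, str]]) -> str:
    """A few-shot prompt shows example input/output pairs before the new task."""
    parts = []
    for i, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {i}:\nInput: {example_input}\nOutput: {example_output}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Now do the same for:\nInput: {task}\nOutput:")
    return "\n\n".join(parts)


examples = [
    ("C major", "C - E - G"),
    ("G major", "G - B - D"),
]

print(zero_shot("Spell the notes of the D major triad."))
print()
print(few_shot("D major", examples))
```

The few-shot version simply places worked examples ahead of the new request and leaves the model to infer the pattern; either string could then be pasted into a chat interface.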

Risks in the near future go beyond ecological concerns. Artistic endeavors once thought to be unique to humanity can now likely be approached by existing AI tools (du Sautoy 2019). A single ChatGPT subscription now gives users access to Sora, an AI video generation model, higher limits for image generation, advanced reasoning models like o3 and o4-mini, and deep research. As such, the unrelenting stream of new model releases will shape events in micro- and macro-political ways (Müller 2016). It is inevitable that AI will become more ubiquitous in our cultures, classrooms, and music technologies. As this happens, we may find ourselves caught in a strong gravitational pull toward posthuman ontologies, epistemologies, methodologies, and pedagogies seen through the lens of a neoliberal product (Braidotti 2013). The implications of such a shift are unknown, although some researchers have warned about job displacement (Misra and Chandwar 2023), privacy concerns (Snoswell et al. 2023), academic integrity issues (Hosseini, Resnick, and Holmes 2023; Patil et al. 2023), impacts on critical thinking (Ayinde et al. 2023), and intellectual property concerns (Onal-Kulavuz and Onal 2023). Researchers’ concerns also extend to data accuracy and transparency (Morjaria et al. 2023; Marino et al. 2023; Su and Yang 2023), misinformation risks (Misra and Chandwar 2023), and diversity and equity impacts (Hamilton et al. 2023).

Agency

Agency is understood as students’ capacity and desire to accomplish a particular educational result. For many, agency has long been a goal of music education. For instance, John Dewey’s (1897) My Pedagogic Creed formalized, for progressive educators, the principle that education begin with student interest and cultivate student agency: “I believe that the question of method is ultimately reducible to the question of the order of development of the child’s powers and interests.… I believe that the active side precedes the passive in the development of the child-nature; that expression comes before conscious impression” (n.p.). Because of the centrality of Deweyan agency in music education scholarship, even today, analyzing a technological tool for its ability to cultivate agency is important. This parallels how other tools have been analyzed throughout music education history.

Daniel: I was first introduced to ChatGPT by students Tucker and Brian (pseudonyms) in Guitar 2, following their interest, as we explored options for songwriting in an informal mode. They were trying to write songs to each other through this program, which they had pulled up on the SmartBoard. They were able to create poems and later have the program suggest guitar chords. This provided a fun few minutes as the two young men tried to express their appreciation for one another’s friendship through a machine’s words.

Here is an example using https://chatgpt.com/. Prompt: Write a song, with chords, expressing my friendship with Tucker.

Verse 1: [G] Sitting under starry skies, [C] reminiscing days gone by [G] Tucker, you’ve been by my side, [D] through laughter, tears, and time [Em] From childhood games to late-night talks, [C] you’ve always been my rock [Am] Through thick and thin, you’ve been my [D] guide

Chorus: [G] Oh, Tucker, my friend, [C] you’re my brother ’til the end [G] Through the highs and lows, [D] you’re the one I chose [Em] Forever in my heart, [C] we’ll never be apart [Am] Together through life’s bends and [D] flows

Verse 2: [G] From bike rides down the avenue, [C] to secrets shared between me and you [G] Through every storm we weathered through, [D] our bond forever true [Em] Through growing pains and victories won, [C] we’ve seen it all, and still we’re strong [Am] Together hand in hand, [D] our friendship carries on

Chorus: [G] Oh, Tucker, my friend, [C] you’re my brother ’til the end [G] Through the highs and lows, [D] you’re the one I chose [Em] Forever in my heart, [C] we’ll never be apart [Am] Together through life’s bends and [D] flows

Bridge: [Em] And when the days grow long and [C] shadows start to fall [Am] I know that you’ll be there, [D] you’ll answer when I call [Em] Our friendship’s like a song, [C] echoing through the years [Am] Through laughter, love, and tears, [D] we’ll conquer all our fears

Chorus: [G] Oh, Tucker, my friend, [C] you’re my brother ’til the end [G] Through the highs and lows, [D] you’re the one I chose [Em] Forever in my heart, [C] we’ll never be apart [Am] Together through life’s bends and [D] flows

Outro: [G] So here’s to you, my friend, [C] this bond will never end [G] Through the years, through every trend, [D] you’re my forever friend [Em] Tucker, my friend, [C] I’ll love you ’til the end [Am] Forever and always, my [D] forever friend

Brian then sang his song to Tucker in a low central Pennsylvania country twang. The only chord not taught in Guitar 1 was A minor, which Brian looked up on the board. The two students did this for a few minutes and then moved on to learning guitar riffs on YouTube channels such as Marty Music. Did the song hit upon important points in their friendship? Did they indeed have highs and lows, and were there secrets shared while riding bikes down an avenue? Does Brian see Tucker as his guide? Perhaps, perhaps not. The song is not very personal, and, as Pope Francis suggested (in the introduction to this paper), it seems to replace the depth and personal storytelling of more genuine songwriting experiences with repetition.

The students could have personalized their songs by writing additional prompts, using this song as a jumping-off point. The hope was that by following students’ interest in ChatGPT, these students would have an opportunity to scaffold their songwriting learning, confronting “sequentially more difficult” (Holster 2024, 5) aspects of songwriting by beginning with modifying a ChatGPT song on a topic of interest. But one long-known aspect of information-rich learning, especially learning with machine learning tools like this one, is decreased attention span. As Herbert Simon famously put it, “What information consumes is attention. A wealth of information means a poverty of attention” (Ratcliffe 2017). This was true for these students, who, after briefly generating a variety of songs to sing to one another, moved on to another task that was more familiar and comfortable for them.

To what extent does ChatGPT promote agency? A teacher engaging students in songwriting with ChatGPT would likely need to invest additional time in preparing students for a creative learning experience, as well as be ready to adjust instruction in a variety of ways to make the experience agentic for students, so that they leave feeling more creative than when they first encountered ChatGPT as a songwriting tool. As such, teachers need to be prepared to push against the attention-span problem, which is a death knell for creative agency.

Jacob: My early interest in AI sparked a curiosity that had evolved significantly by the time I pursued my PhD in music education in 2018. Despite my advisors’ recommendation to focus on traditional methodologies, I directed my energy toward natural language processing (NLP) and algorithmic processes applied to methodologies and epistemologies. Initially, I worked with Python code, which ran in a linear, predefined manner. Over the last five to six years, however, advances in NLP have progressively enhanced my agency as a user.

ChatGPT can be supportive for individuals struggling with articulating their thoughts clearly. Even when users type in gibberish or with numerous spelling errors, ChatGPT can understand and correct these communications, turning fragmented inputs into coherent text. This capability is particularly beneficial for those with dyslexia or other language-related challenges. ChatGPT also supports the visualization and clarification of thoughts and ideas by taking abstract concepts and translating them into structured narratives or visuals. Artists and designers can describe their concepts in rough sentences, and ChatGPT can help develop these ideas into detailed project plans, visual descriptions, images, and videos.

In music education, ChatGPT can be an agency-supportive tool for both students and educators. Students can use it to understand complex musical concepts, receive feedback on compositions, and explore different genres and historical contexts, while educators can create engaging lesson plans, design assessments, and provide personalized feedback, among dozens of other potential use cases. Although the capacity for new technologies to respond to diverse needs and goals can raise privacy concerns, a balance may be found where communities engage with AI in ways that respect shared values and support collective learning objectives. GPTs already have the potential to remember important facts about users when asked to do so, and context windows for user inputs are consistently increasing as models improve.

Although ChatGPT is undeniably useful for various tasks, the algorithms we interact with are constantly evolving. Because of this reality, I am interested in moving beyond Holster’s (2024) recommendations for using ChatGPT as a tool for teachers and students and into near-future scenarios that address pressing sociopolitical and ecological issues. Systems of human and non-human interaction are evolving. For example, AI agents (autonomous systems capable of performing tasks and adapting independently) are expected to be broadly integrated into systems (Russell and Norvig 2020). In music education, AI agents could take on many of a music teacher’s tasks, from automating instructional content and communication to personalizing learning experiences that adapt to individual students’ skill levels. This evolution emphasizes the need to consider AI’s sociopolitical and ecological impacts beyond its immediate utility, which for now remains a prompt for reflection on AI’s broader influence on our collective future.

Conclusions

Daniel: Ultimately, I remain reluctant to adopt ChatGPT or similar machine learning tools widely because of the ecological destruction such tools cause. For me, having a livable earth on which to music is more important than adopting new technological tools, however convenient, accessible, or agentic. That said, as a middle school teacher, I will use this tool with students with disabilities to the extent that it helps them thrive. The price tag of our ecological waste is paid by future generations and by communities living in the Global South. It is a privilege to experiment with this tool, but privilege is advantage and, in our capitalistic global system, results in disadvantage for others. The centralization of ChatGPT, as a concentration of power in the hands of the bourgeoisie, leads to this tool’s ecological destruction: water waste, carbon waste, plastic waste, and e-waste for quarterly economic gains.

Because of the nature of machine learning tools, I am reluctant to grant them rights. Environmental movements, including the Deep Ecology Movement and the Animal Rights Movement (see Shevock 2018), have long argued that rights be extended to non-human biological organisms. Chimpanzees, lab rats, and rainforests have each had little success in acquiring rights, despite arguably possessing some form of intrinsic, not manmade, intelligence. The AI movement’s adoption of terms that originated in environmental activism, like anthropocentrism, community, and place, is problematic. This adoption of terminology seems to put further power in the hands of humans, the bourgeoisie in particular. In my assessment, AI is anthropocentric, just as capitalism is human-centered: tech developers, venture capitalists, and students in the Global North, especially in suburban school districts, are at the center of AI profit-making, marketing, consumerism, and pedagogy. Any centralized object of wealth is going to lead to greater injustice between urban and rural, and between bourgeoisie and proletariat.

Additionally, my use of ChatGPT 3.5 in this study produced many instances of hallucination, not shared in this paper, such as obviously incorrect information, possibly because 3.5 lacked internet access. Internet access does not, however, eliminate hallucination if that hallucination is embedded in the algorithm. And the hard limitations of the material on earth, treated as commodities since the beginning of the Industrial Revolution, mean that machine learning tools cannot be made available to every human on earth, just as social media has not been and cannot be. Without global equity, impossible within capitalist economies, AI is yet another wasteful toy of the affluent Global North, at the expense of the survival of our kinfolk living in the Global South.

Jacob: While I agree with Daniel’s concerns regarding the ecological impact and centralization of power associated with ChatGPT, I believe that with responsible use and continuous improvements, these tools can offer significant benefits in educational contexts. The iterative nature of working with ChatGPT mirrors human creative processes, which can be seen as a valuable pedagogical tool rather than a drawback. Contextual priming, reflexivity, and step-by-step instructions can enhance the quality of interactions and outputs. Moreover, AI tools like ChatGPT can support individuals with language-related challenges, aid in complex task management, and foster community engagement through collaborative knowledge construction. Concurrently, individual users, stakeholders in education, and corporations should aim to balance the meaningful and quick adoption of these technologies with sustainable practices and ongoing efforts to reduce their environmental footprint.

For the meaningful adoption of AI in education to align with ethical and sustainable practices, regulatory oversight is essential. Currently, the lack of comprehensive regulations specific to AI in education leaves significant gaps in accountability, risking misuse and over-dependence on corporate interests. Existing frameworks, such as the European Union’s AI Act and principles set forth by UNESCO, are beginning to shape ethical AI use (European Commission 2021; UNESCO 2021). However, broader, enforceable policies are needed globally to ensure that AI’s role in education upholds transparency, equity, and environmental responsibility. Educators, in turn, can lead by example: they should prioritize tools, like Claude by Anthropic, that disclose data collection practices and emphasize privacy protections, and they should advocate for technologies with low environmental impact. Furthermore, educators can cultivate critical AI literacy among students, empowering them to understand AI’s role in society and question its ethical implications. By actively supporting AI policies that protect collective values and sustainability, educators can contribute to a foundation where AI serves the interests of learning communities, not just corporations.

[Jointly]: We hope that music educators in PreK–university settings, as well as administrators, who too often find themselves drowning in an ocean of stuff, will use the Model for Convivial Tools as they deliberate on the adoption of new technological tools, including machine learning AI, in music curricula. The Model for Convivial Tools offers our field rational criteria, whereas in previous decades we applied no criteria and had no reason to embrace or refuse any newly manufactured tool. The four areas for consideration—community, waste, convenient/foolproof/accessible/noncoercive/repairable/dependable, and agency—offer a set of conditions that can be met as we assess adoption in the future; they also illuminate conditions that, when unmet, substantiate our choice to reject certain new purchases and new tools, rather than continuing to devastate the waters of life and magnify local and global inequalities by participating in unreasoned consumerism. However, as we wrote this, we considered additional criteria that might be included. For instance, specific to machine learning tools, verification of inputted information might be an important aspect of the repairability of AI responses. Wikipedia encountered a version of this question years ago[15]: how do we verify the responses offered by machine learning tools, so they do not metamorphose into something offensive, racist, classist, or otherwise destructive?

Ultimately, we hope to develop this model further by considering a major element: time. Historically, new technologies have been developed, within capitalism, in a state of diversity, which over time transmogrifies into a monopoly. Early in a new technological tool’s development, adopters have a choice of which to adopt and, subsequently, which not to adopt. As explored in this paper, the Model for Convivial Tools offers early adopters guidelines, which may influence which technological team—which engineers, owners, and marketers—will corner the market. We will then have had at least some say by the time that market becomes anticompetitive in its subsequent state of monopoly. Because of this consolidation, five years down the road we likely will not have a choice of which machine learning tools to adopt. It will be too late to choose the teams that have valued community, agency, repairability, convenience, and waste reduction, for example by using water-reuse systems that have been available since the development of nuclear power in the 1950s. But if we enter the early adoption process now with a plan, we might be able to influence this from within the mouth of the capitalist beast, easing human and ecological misery now, while we work toward sustainable and agentic alternatives.

About the Authors

Daniel J. Shevock is blessed to be an Appalachian music education philosopher whose scholarship blends music ecology, autoethnography, ecotheology, and creativity. He is the author of two academic books published by Routledge, Eco-Literate Music Pedagogy (2017) and Music Lessons for a Living Planet: Ecomusicology for Young People (2025), as well as peer-reviewed articles, chapters in edited books, a YouTube channel, and a blog at eco-literate.com, all cultivating and critiquing human understandings of ideas including sustainability, place, culture, religion, localism, and poverty. He earned his degrees from Clarion University of Pennsylvania (BSEd 1997), Towson University (MS 2000), and Penn State (PhD 2015), and has taught students from pre-kindergarten to university levels in Maryland and Pennsylvania (USA). Dan is currently a middle school general music teacher (Grades 5–8) with the rural Keystone Central School District in Pennsylvania and is an avid hiker, Christian, runner, vegetarian, and gardener.

Jacob Holster is an Assistant Teaching Professor of Music Education at Penn State University. They earned their M.M.E. from the University of North Carolina, Greensboro, and a Ph.D. from the University of Colorado, Boulder, focusing on the integration of artificial intelligence in teaching, learning, and research. Their research explores music teacher attrition, student motivation and well-being, and AI applications in music education, with publications in Update: Applications of Research in Music Education, Music Educators Journal, Psychology of Music, Arts Education Policy Review, Qualitative Research in Music Education, and Bulletin of the Council for Research in Music Education. They serve on the editorial board of Contributions to Music Education and are a faculty affiliate with the Penn State Institute for Computational Data Science. They teach courses in artificial intelligence, music teacher preparation, and quantitative research methods.

References

Ayinde, Lukman, Muhammad P. Wibowo, Ravuri B. Bhargavi, and Faisal B. Emdad. 2023. ChatGPT as an important tool in organizational management: A review of the literature. Business Information Review 40 (3): 137–49. https://doi.org/10.1177/02663821231187991

Bates, Vincent C. 2014. Rethinking cosmopolitanism in music education. Action, Criticism, and Theory for Music Education 13 (1): 310–27. https://act.maydaygroup.org/articles/Bates13_1.pdf

Bates, Vincent C., and Daniel J. Shevock. 2020. The good, the bad, and the ugly of social media in music education. In The Oxford Handbook of Social Media and Music Learning, edited by Janice L. Waldron, Stephanie Horsley, and Kari K. Veblen, 619–44. Oxford University Press.

Biswas, Som S. 2023. Letter to the editor: Potential use of ChatGPT in global warming. Annals of Biomedical Engineering 51 (6): 1126–27. https://doi.org/10.1007/s10439-023-03171-8

Botchu, Bhuvan, Karthikeyan P. Iyengar, and Rajesh Botchu. 2023. Letter to the editor: Can ChatGPT empower people with dyslexia? Disability and Rehabilitation: Assistive Technology 19 (5): 2131–32. https://doi.org/10.1080/17483107.2023.2256805

Braidotti, Rosi. 2013. The posthuman. Polity Press.

Castells, Manuel. 1996. The rise of the network society. Blackwell.

Dartmouth. n.d. Artificial intelligence (AI) coined at Dartmouth. Dartmouth College. https://home.dartmouth.edu/about/artificial-intelligence-ai-coined-dartmouth

De Silva, AHDB, RSP Ranasinghe, and MRM Rihan. 2023. Negative effects of integrating ChatGPT in higher education system concerning achieving resilience through digitalization, sustainability, and sectoral transformation. KDU Library: General Sir John Kotelawala Defence University, Sri Lanka. http://ir.kdu.ac.lk/handle/345/7345

Dewey, John. 1897. My pedagogic creed. E. L. Kellogg & Co. https://archive.org/details/mypedagogiccree00dewegoog

Di Baldassarre, Giuliano, Niko Wanders, Amir AghaKouchak, Linda Kuil, Sally Rangecroft, Ted I. E. Veldkamp, Margaret Garcia, Pieter R. van Oel, Korbinian Breinl, and Anne F. Van Loon. 2018. Water shortages worsened by reservoir effects. Nature Sustainability 1 (11): 617–22. https://doi.org/10.1038/s41893-018-0159-0

du Sautoy, Marcus. 2019. The creativity code: Art and innovation in the age of AI. Harvard University Press.

European Commission. 2021. Proposal for a regulation of the European Parliament and of the Council laying down harmonized rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

France, Eli, Jon Chun, and Katherine Elkins. 2023. Can Chat-GPT rap: Analyzing the ability of Chat-GPT to replicate and analyze the lyrical style of Young Thug. Poster presented at IPHS 300 AI for Humanity. https://digital.kenyon.edu/cgi/viewcontent.cgi?article=1046&context=dh_iphs_ai

George, A. Shaji, A. S. Hovan George, and A. S. Gabrio Martin. 2023. The environmental impact of AI: A case study of water consumption by ChatGPT. Partners Universal International Innovation Journal 1 (2): 91–104.

Goodlad, Lauren M. E. 2023. Editor’s introduction: Humanities in the loop. Critical AI 1 (1–2). https://doi.org/10.1215/2834703X-10734016

Google. 2021. Google’s commitment to operating on 24/7 carbon-free energy by 2030. Google. https://sustainability.google/progress/energy

Grudin, Jonathan T. 2023. ChatGPT and chat history: Challenges for the new wave. Computer 56: 94–100.

Hamilton, Laurie, Deborah Elliott, Amy Quick, Susan Smith, and Vanessa Choplin. 2023. Exploring the use of AI in qualitative analysis: A comparative study of guaranteed income data. International Journal of Qualitative Methods 22. https://doi.org/10.1177/16094069231201504

Holster, Jacob. 2024. Augmenting music education through AI: Practical applications of ChatGPT. Music Educators Journal 110 (4): 36–42. https://doi.org/10.1177/00274321241255938

Hosseini, Mehdi, David B. Resnik, and Krista Holmes. 2023. The ethics of disclosing the use of artificial intelligence tools in writing scholarly manuscripts. Research Ethics 19 (4): 449–65. https://doi.org/10.1177/17470161231180449

Illich, Ivan. 1973. Tools for conviviality. Marion Boyars.

Illich, Ivan. 1986. H2O and the waters of forgetfulness. Marion Boyars.

Kojima, Takeshi, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, and Yusuke Iwasawa. 2023. Large language models are zero-shot reasoners. arXiv. https://ar5iv.org/pdf/2205.11916.pdf

Lacoste, Alexandre, Alexandra Luccioni, Victor Schmidt, and Thomas Dandres. 2019. Quantifying the carbon emissions of machine learning. Machine Learning CO2 Impact Tool. https://mlco2.github.io/impact

Li, Deyi, Wen He, and Yike Guo. 2021. Why AI still doesn’t have consciousness? CAAI Transactions on Intelligence Technology 6: 175–79. http://doi.org/10.1049/cit2.12035

Lovable. 2024. Lovable.dev Documentation: Introduction. https://docs.lovable.dev/introduction

Marino, Matthew T., Elias Vasquez, Lisa Dieker, James Basham, and Jose Blackorby. 2023. The future of artificial intelligence in special education technology. Journal of Special Education Technology 38 (3): 404–16. https://doi.org/10.1177/01626434231165977

Matsunobu, Koji. 2013. Instrument-making as music-making: An ethnographic study of Shakuhachi students’ learning experiences. International Journal of Music Education 31 (2): 190–201. http://doi.org/10.1177/0255761413486858

Misra, Debashish P., and Kunal Chandwar. 2023. ChatGPT, artificial intelligence and scientific writing: What authors, peer reviewers and editors should know. Journal of the Royal College of Physicians of Edinburgh 53 (2): 90–93. https://doi.org/10.1177/14782715231181023

Mollick, Ethan. 2023, July 15. How to use AI to do stuff: An opinionated guide. One Useful Thing. https://www.oneusefulthing.org/p/how-to-use-ai-to-do-stuff-an-opinionated

Morjaria, Leo, Levi Burns, Kenya Bracken, Quang N. Ngo, Mark Lee, Anthony J. Levinson, John Smith, Penelope Thompson, and Matthew Sibbald. 2023. Examining the threat of ChatGPT to the validity of short answer assessments in an undergraduate medical program. Journal of Medical Education and Curricular Development 10. https://doi.org/10.1177/23821205231204178

Müller, Vincent C., ed. 2016. Ethics of artificial intelligence and robotics. Routledge.

Murphy, Patrick D. 2017. The media commons: Globalization and environmental discourses. University of Illinois Press.

NVIDIA. n.d. NVIDIA A100 Tensor Core GPU. NVIDIA. https://www.nvidia.com/en-us/data-center/a100

Onal, Selma, and Deniz Kulavuz-Onal. 2023. A cross-disciplinary examination of the instructional uses of ChatGPT in higher education. Journal of Educational Technology Systems. https://doi.org/10.1177/00472395231196532

OpenAI. 2023. ChatGPT. November 2023 version. Large language model. https://chat.openai.com/

Patil, Namrata S., Richard S. Huang, Charles B. Van Der Pol, and Natasha Larocque. 2023. Comparative performance of ChatGPT and Bard in a text-based radiology knowledge assessment. Canadian Association of Radiologists Journal. https://doi.org/10.1177/08465371231193716

Raeini, Mohammad. 2023. The evolution of language models: From n-grams to LLMs, and beyond. SSRN. http://www.doi.org/10.2139/ssrn.4625356

Ratcliffe, Susan. 2017. Oxford essential quotations. 5th ed. Oxford University Press. https://www.oxfordreference.com/display/10.1093/acref/9780191843730.001.0001/q-oro-ed5-00019845

Roads, Curtis. 1996. The computer music tutorial. MIT Press.

Russell, Stuart, and Peter Norvig. 2020. Artificial intelligence: A modern approach. 4th ed. Pearson.

Selwyn, Neil. 2021. Ed-tech within limits: Anticipating educational technology in times of environmental crisis. E-Learning and Digital Media 18 (5): 496–510. https://doi.org/10.1177/20427530211022951

Shevock, Daniel J. 2015. The possibility of eco-literate music pedagogy. TOPICS for Music Education Praxis 1: 1–23. http://topics.maydaygroup.org/2015/Shevock15.pdf

Shevock, Daniel J. 2018. Eco-literate music pedagogy. Routledge.

Shevock, Daniel J., and Vincent C. Bates. 2025. Music lessons for a living planet: Ecomusicology for young people. Routledge.