RAN JIANG

Western University (Canada)

June 2025

Published in Action, Criticism, and Theory for Music Education 24 (3): 53–78. https://doi.org/10.22176/act24.3.53

Abstract: Artificial Intelligence (AI) technology is reshaping the ways humans study and work across various disciplines. In the field of music, AI technology shows the potential to empower individuals at all levels of musical knowledge, from novices to experts, in music creation. In this article, I explore philosophical questions within both AI theories and music education, and I present two empirical instances of humans’ musical interactions with Generative AI technology. I argue that music education is facing an uncertain yet promising future at the confluence of AI theories and practical applications in music learning. Music educators must engage in deeper sociological and philosophical reflection on their pedagogical practices, engaging with AI technology and its implications for music learning with critical humility, in order to consider the various ways (potential) learners might gain access, to foster richer interactions between humans and computers, and to move beyond existing teaching practices toward sustainable and inclusive music practices.

Keywords: Artificial intelligence, philosophy, power of knowledge, human-machine interaction, agency, music collaboration

On December 11, 2023, Western University convened its inaugural “Q&A” (question and answer) session to discuss the burgeoning impact of artificial intelligence (AI) within the academic landscape. This session, led by Western’s Chief AI Officer Mark Daley, opened with a stimulating prompt: “Use one word to describe AI.” Among the audience’s answers, shown on the screen through real-time interaction, the words “exciting” and “scary” appeared most frequently. This polarity in perception reflected the community’s diverse reception of AI’s rapid proliferation in recent years (Alto 2023). With the potential for wide-ranging integration into human endeavors in education, knowledge generation, and artistic creation, AI technology simultaneously sparks excitement and raises concerns about what the future may hold. Ge Wang, a music AI scientist at Stanford, used the term “AI FOMO” (Stanford Alumni 2023) to describe the fear of missing out that musicians experience regarding AI’s potential threats to their artistic careers. With regard to music education, what possibilities might an AI-infused future hold for the field?

To understand AI’s impact on music education, it is necessary to review the underlying technology of AI, particularly how it synthesizes and produces knowledge and why this raises philosophical concerns. In this article, I begin with a theoretical introduction to the use of AI technology in music education, followed by an overview of AI theories that discusses the technology’s development and its aim to imitate human thinking and learning. I then explore the function of Generative AI models, focusing on their ability to perform creative tasks through neural networks in response to human prompts. Subsequently, I present two instances of building and using music AI models from a music education teacher-researcher’s perspective: (a) a research participant’s recent work on developing a music composition AI model; and (b) my own experience using another music AI model to generate a music excerpt. Through these examples, I demonstrate how Generative AI is lowering the barriers faced by individuals who can be excluded by traditional, teacher-directed, knowledge reproduction-oriented music education practices (Smith and Hendricks 2022; Walzer 2024).

Next, I address the ethical considerations that humans can encounter while using AI models for music making. I then consider how AI technology may evolve into an iterative musical instrument that empowers both its developers and users through input and output processes that transcend traditional music learning frameworks (Dahlstedt 2021). I conclude by discussing the possibilities of expanding musical collaborations between musicians and AI scientists and of sparking combined technological and artistic interests in a wider population. I advocate for music educators to rethink their teaching philosophies and traditional discourses, aiming for students to contribute actively to music creation alongside evolving AI technology, rather than perpetuating traditional, hierarchical power structures that limit students’ musical and learning agency (Smith and Hendricks 2022).

Literature on AI and Music

Derived from the overlapping interest in music and computer science, AI technology is commonly considered a tool to assist music learning and to facilitate music creation. In the field of composition, for example, researchers have examined how the use of AI tools may change composers’ working habits through their interactions with machines (Cope 2021; Dahlstedt 2021; Franchi 2013; Louie et al. 2020). In music education, research on AI has predominantly focused on students’ learning facilitated by AI software, both inside and outside the classroom. For example, Addessi and Pachet (2005) explored how kindergarten children interact with the reflexive AI music system Continuator to engage informally in music improvisation and collaboration. Regarding adult learning, Louie et al. (2020) investigated how amateur music learners develop their composition skills, self-efficacy, and sense of musical ownership through collaboration with AI steering tools. Ventura (2019, 2022) investigated how two different AI composition learning programs assisted students with and without dyslexia in learning to write bass lines and visualize composition. Ventura (2022) aimed to enhance inclusive music learning and promote students’ capacity to develop their agency “without making them feel inferior” (24) while “increasing [dyslexic students’] independence in a supportive environment” (15). In addition, Tsuchiya and Kitahara (2019) explored the effectiveness of an algorithmic methodology for non-notation-based music representation to support amateur musicians’ compositions. Their research demonstrated possibilities for transcending traditional music learning through human-computer interaction with AI technology.

While these studies showed how people of different ages and diverse musical backgrounds can learn and create music more effectively with AI as a learning assistant, the philosophical implications of these studies for music education remain largely unexamined.

Philosophical Encounters with Music Education and Technology

The aforementioned technological advances and their applications call for critical philosophical inquiry into the role of AI in music education. As Bowman and Frega (2012a) argued, philosophy “often challenges and subverts habitual thought processes, processes that are familiar, reassuring, and consoling. Philosophical inquiry works, when and if it does, by generating conceptual tensions that may initially involve confusion and discomfort” (5–6). Similarly, Postman and Weingartner (1969) urged teachers to proactively review their roles in education and confront any “future shock[s]” (14) in this rapidly changing world, rather than merely reproduce the heritage of human knowledge without adapting to unknown possibilities. Within philosophical discussions of music technology education, Walzer (2024) advocated for integrating empirical practices in technological music from sociological perspectives, and emphasized an approach that is “imaginative, pertinent, and flexible” (75) in order to systematically address technology’s role within the complex cultural contexts of music education.

Given that music education is deeply intertwined with social, cultural, and political systems (Bowman and Frega 2012a), the arrival of AI prompts philosophical reflection on the technology’s potential role. Raising people’s critical consciousness in times of change requires not only adaptation to reality but also active participation in (re)shaping it (Freire 1974). Music educators would benefit from reflecting on how their practices can support this transformative engagement. As AI rapidly gains recognition in various fields, this paper poses the question: How might music educators philosophically consider the role of AI in mediating music learning? Investigating how AI can address access issues previously overlooked in music education allows educators to reflect on their pedagogical interactions, thereby moving their teaching toward a more creative and sustainable future (Bowman and Frega 2012b; Dahlstedt 2021; Smith and Hendricks 2022; Walzer 2024).

A Background on AI Theory

This section focuses on multidisciplinary discussions of AI from theoretical and philosophical perspectives. By synthesizing AI-related literature, I examine the capabilities and limitations of these technologies in learning and knowledge creation. These capabilities and limitations show both similarities to and differences from human learning, which can offer preliminary implications for the field of music education.

The foundational philosophical question of AI was posed by Alan Turing (1950): “Can machines think?” (433). Over subsequent decades, Turing’s inquiry has expanded into various fields, prompting research into both the theoretical underpinnings and the practical applications of AI across disciplines including psychology, linguistics, mathematics, and computer science (Boden 1990; Lakoff 1987; McEnery and Wilson 1996; Morse et al. 2011). Current philosophical discussions of AI learning draw on neuroscience and cognitive science to examine the technological challenges of implementing AI systems. For example, Besold (2013) elaborated on how computers have evolved in recent decades to “think” and process knowledge like human beings. The corresponding criterion stems from the Turing Test,[1] which has challenged both humans’ and computers’ learning capacities for decades. Besold (2013) also explained that AI models are programmed with algorithms that interact with the environment, enabling them to meet the Turing Test criterion: to understand, synthesize, reason, and ultimately generate knowledge.

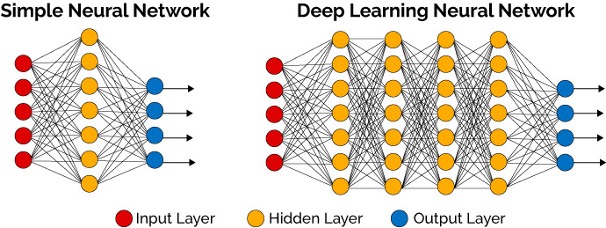

AI systems are designed to execute tasks with logical precision and to solve problems of varying complexity, using methods grounded in rationality (Besold 2013; Franchi 2013; Omohundro 2014). Regarding training AI for creative tasks, Cope (2021) explained that a deep learning neural network embedded with algorithms serves as the primary mechanism through which Generative AI processes inputs and generates outputs. As illustrated in Figure 1 (The Data Scientist n.d.), Generative AI begins with a comprehensive dataset, supplied by AI scientists, that is fed into the input layer of the AI model. Different algorithms embedded in neural network frameworks are then used to recognize and process the dataset’s underlying patterns. Through one or more hidden layers of neuron connections run by algorithms, AI undergoes intricate learning procedures that are not fully transparent to humans (Cope 2021; Dahlstedt 2021). Following this extensive processing, parameter adjustment, and reflection to “mimic human intelligence” (Cope 2021, 170), Generative AI presents the synthesized information as responses to users’ prompts. Consequently, Generative AI’s ability to create information depends on the breadth of human knowledge embedded in its initial database, as well as on the algorithms used in the hidden layer(s). The scope of this database is an initial and defining factor in training the AI’s creative capabilities within specific fields (Cope 2021; Dahlstedt 2021).

Figure 1: Simple neural network vs deep learning neural network (The Data Scientist n.d., also cited in Cope 2021, 171)
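
To make the input-to-hidden-to-output flow described above more concrete, the following is a minimal Python sketch. It is not drawn from Cope (2021) or from any particular Generative AI system. It assumes a small fully connected network with ReLU activations and hand-picked weights. In a real model, training would adjust these parameters automatically, and generative models are vastly larger. The only difference between the “simple” and “deep” networks below is the number of hidden layers, mirroring the contrast in Figure 1. The names `dense`, `forward`, `hidden_1`, and the weight values are illustrative assumptions.

```python
# A toy, pure-Python forward pass: data enters the input layer, passes
# through one or more hidden layers, and emerges at the output layer.
# All weights below are arbitrary illustrative values, not trained ones.

def relu(x: float) -> float:
    """Rectified linear activation: keeps positive signals, zeroes the rest."""
    return max(0.0, x)

def identity(x: float) -> float:
    """Leaves the output neuron's weighted sum unchanged."""
    return x

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the input vector through each (weights, biases, activation) layer in turn."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical layers: (weights, biases, activation)
hidden_1 = ([[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0], relu)
hidden_2 = ([[1.0, 0.5], [-1.0, 1.0]], [0.0, 0.0], relu)
output = ([[1.0, 2.0]], [0.5], identity)

simple_net = [hidden_1, output]            # one hidden layer
deep_net = [hidden_1, hidden_2, output]    # two hidden layers ("deep")

print(forward([1.0, 2.0], simple_net))  # [4.5]
print(forward([1.0, 2.0], deep_net))    # [5.5]
```

Training, which corresponds to the “parameter adjustment” mentioned above, would repeatedly nudge the numbers in `hidden_1`, `hidden_2`, and `output` to reduce error on the dataset. That step is omitted here for brevity.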

Figure 1: Simple neural network vs deep learning neural network (The Data Scientist n.d., also cited in Cope 2021, 171)

Exploring the potential of AI to foster inspiration, Freed (2013) examined introspection as “the basis of phenomenology” (169) and its significant influence on thought and ideation. However, the nuances of human experience pose challenges for AI training, which requires precise and detailed data descriptions. On the topic of AI as a tool for enhancing learning, Ness et al. (2022) drew on Vygotsky’s (1962) constructivism to emphasize the critical role of communication in the classroom, where knowledge is constructed between teachers and students. They asserted that experience and cognition not only interact throughout the learning process but also go beyond basic knowledge transfer. Accordingly, individuals’ meaningful cognitive construction and articulation of thoughts depend on engagement with their current environment (Stanford Alumni 2023). Because Generative AI’s capacity for knowledge synthesis is constrained by its fixed dataset, AI is unlikely to generate new knowledge independently and in real time from experience, as humans do (Ness et al. 2022). Additionally, because its learning process is opaque, Generative AI may produce hallucinated information in response to users’ prompts, which can raise ethical issues if humans use it uncritically (Alto 2023). To identify and avoid hallucinations, users must exercise caution and critical thinking when interacting with Generative AI.

Empirical Practices with Music AI

This section discusses two cases in which human musical endeavors intersect with Generative AI. The first is a case study of a research participant developing a music AI model for composition. The second draws on my autoethnographic experience of using music AI software for music creation. By synthesizing and comparing both cases, I aim to provide contextual background for the later sections, which address ethical considerations and implications for music education.

Case One: Building a Music AI Model

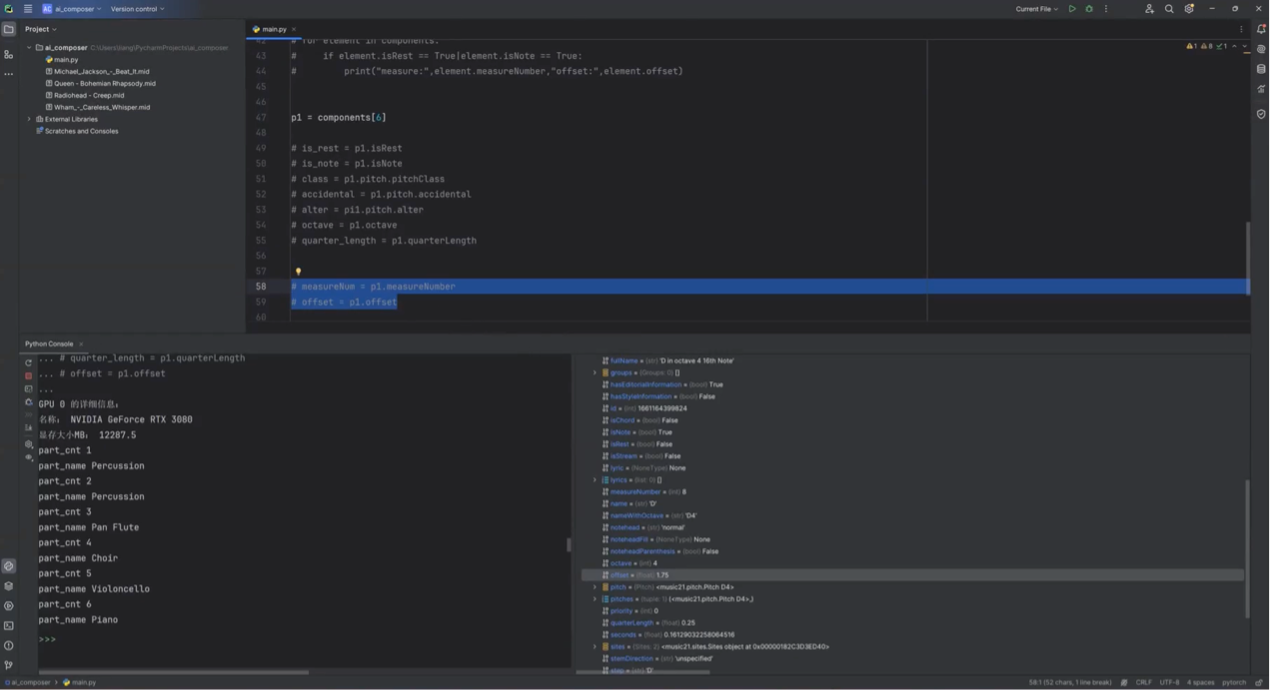

The first example comes from my current research study on learning AI composition.[2] In this case study, the research participant, whom I refer to as Q, is building a Generative AI model for music composition. Instead of adopting commercially available AI composition software, Q read through related programming projects on GitHub,[3] experimented with multiple melody isolation tools to decode musical data (GitHub n.d.), and selected a specific music database for AI training. Q’s process of building their music AI model mainly involved selecting MIDI files of their preferred songs, using the deep learning framework PyTorch to convert the MIDI files into a machine-readable format derived from the notation, using a recurrent neural network (RNN) algorithm in one of the hidden layers for sequence generation, and training the model with the Adam optimization algorithm. At the current stage, this customized AI model has produced a preliminary full score of a song Q selected and categorized the notation into corresponding parameters, such as measure numbers, notes, rests, and pitches (see Figures 2 and 3). By capturing these critical parameters from a specific number of songs, Q developed a customized music database based on their preferences.

Figure 2: A screenshot of Q’s AI model: turning the MIDI of Radiohead’s “Creep” into notation

Figure 2: A screenshot of Q’s AI model: turning the MIDI of Radiohead’s “Creep” into notation

Figure 3: A screenshot of Q’s AI model: categorizing the notation of “Creep” with programming parameters
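
Figures 2 and 3 show Q’s model organizing notation into parameters such as measure numbers, notes, rests, and pitches. The Python sketch below is a hypothetical illustration of that kind of categorization, not Q’s code. It assumes the MIDI file has already been decoded into a sorted, single-melody list of (onset, duration, MIDI pitch) events measured in beats, and it assumes 4/4 time. The names `to_symbols`, `Symbol`, and `pitch_name` are invented for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def pitch_name(midi_number: int) -> str:
    """Convert a MIDI note number to a name, using the convention that 60 = C4."""
    octave = midi_number // 12 - 1
    return f"{NOTE_NAMES[midi_number % 12]}{octave}"

@dataclass
class Symbol:
    measure: int           # 1-based measure number
    beat: float            # 1-based beat position within the measure
    kind: str              # "note" or "rest"
    pitch: Optional[str]   # pitch name for notes, None for rests
    duration: float        # length in beats

def _make_symbol(onset: float, duration: float, midi: Optional[int],
                 beats_per_measure: int) -> Symbol:
    """Locate an event in its measure and label it as a note or a rest."""
    measure = int(onset // beats_per_measure) + 1
    beat = onset % beats_per_measure + 1
    if midi is None:
        return Symbol(measure, beat, "rest", None, duration)
    return Symbol(measure, beat, "note", pitch_name(midi), duration)

def to_symbols(events: List[Tuple[float, float, int]],
               beats_per_measure: int = 4) -> List[Symbol]:
    """Turn sorted, monophonic (onset, duration, midi_pitch) events into notes and rests.

    Simplification: a note or rest that crosses a barline is not split.
    """
    symbols: List[Symbol] = []
    cursor = 0.0  # the beat at which the previous note ended
    for onset, duration, midi in events:
        if onset > cursor:
            # Silence between two notes becomes an explicit rest.
            symbols.append(_make_symbol(cursor, onset - cursor, None, beats_per_measure))
        symbols.append(_make_symbol(onset, duration, midi, beats_per_measure))
        cursor = onset + duration
    return symbols

# Hypothetical melody fragment in 4/4 (not taken from "Creep" or from Q's data).
melody = [(0.0, 1.0, 67), (1.0, 1.0, 71), (3.0, 1.0, 72), (4.0, 2.0, 74)]
for s in to_symbols(melody):
    print(s.measure, s.beat, s.kind, s.pitch, s.duration)
```

For this invented fragment, the gap between beats 3 and 4 of measure 1 becomes a rest, and the last note lands on beat 1 of measure 2. A list of such symbols is one plausible kind of sequence that an RNN could then learn to continue.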

Figure 3: A screenshot of Q’s AI model: Categorizing notation of Creep with programming parameters

Q’s purpose in building this AI model was to explore alternative ways of engaging with music composition that align with their personal interests and background in information technology. As an information technologist and rock fan with limited formal music training, Q previously lacked the expertise to compose using notation-based methods. However, by developing a music AI model, integrating a music database, and finding suitable voice isolation tools for transcription on GitHub, Q no longer finds transcribing music an obstacle.

Case Two: Using a Music AI Model

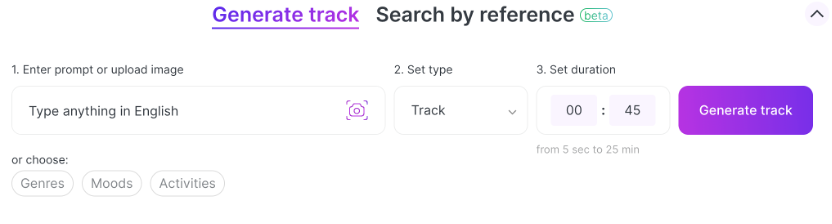

The second example is a music excerpt (Mubert 2024) that I generated using the music AI website Mubert (Mubert n.d.a). Mubert lets users make music by entering either a text or an image prompt that describes the desired musical characteristics. I entered the prompt “An R&B song about a lazy cat” and set a duration of 45 seconds. In response, Mubert generated a piece with a laid-back R&B rhythm pattern that, from my perspective, aligned fairly well with the prompt.

Having grown up in China, I did not encounter R&B as part of my social context. Additionally, since my music training was predominantly in Western classical music, I could not determine whether my generated Lazy Cat excerpt authentically represented the features of R&B culture or its subgenres. However, without learning to write R&B drum patterns or using composition software on my computer, I became the co-creator of an R&B song excerpt with AI within a minute.

My purpose in engaging with Mubert was to explore how AI can facilitate access to music creation for individuals like me, who may lack the background or skills to compose in certain genres. With my cat by my side while exploring Mubert, I was curious to see how this music AI tool would connect the idea of “a lazy cat” to R&B music. While the generated excerpt may not be a perfect representation of R&B, it allowed me to engage with the genre in a way that my existing skills would not otherwise have permitted.

The two examples share a similarity: different AI models can be used or built to generate content that fulfills users’ distinct needs at both the input and the output ends, regardless of whether users could fulfill those needs through other means of music making. By bridging the gap between users’ aspirations and their actual capabilities, Generative AI becomes a transformative tool for music learning and making. However, both examples also reveal issues concerning how two different individuals chose to access music making, as well as the social and ethical considerations underlying these practices. Synthesizing both examples, the following sections discuss these issues in detail.

Discussion

Blurring the Knowledge Barriers

Barriers to knowledge, including barriers to using AI technology, can silence individuals from expressing their needs (Quigley and Smith 2021; Smith and Hendricks 2022). In music education, traditional performance-oriented approaches often enable socioeconomically and educationally privileged groups to access music activities that are compatible with their social backgrounds (Philpott and Spruce 2021; Regelski 2012; Smith and Hendricks 2022). Consequently, those with limited access to learning opportunities and resources may find themselves marginalized in music education (Quigley and Smith 2021). The barriers created by such a system can discourage historically marginalized groups from being musical and artistically expressive, inadvertently creating a class of individuals excluded from musical practices (Bell 2016).

Echoing Q’s experience of gaining access through AI technology, Mubert emphasizes the low barrier to entry that AI can offer users who want to engage in music making. Mubert’s website (n.d.a) states:

AI Music has no boundaries. The abilities of Artificial Intelligence and the creativity of music producers make possible a symbiotic relationship between humans and the algorithms. Millions of samples from hundreds of artists are used by Mubert to instantly generate royalty-free AI music, flawlessly suited to the content’s purpose. Collaboration like no other, humans and technology unite to bring the perfect sound every time.

This statement advocates for a mutual partnership between humans and technology, claiming that AI can blur existing barriers and amplify creative output. The infusion of various generative mechanisms and adjustments of musical parameters reconstructs musical knowledge, which is reshaped by the music AI scientists who have developed platforms such as Mubert (Dahlstedt 2021). Such knowledge reconstruction allows users to co-create music with AI (Addessi and Pachet 2005; Bown 2021; Louie et al. 2020). As user-AI interactions evolve, the traditional barriers constructed by the knowledge system begin to fade.

Generative AI assistance offers learners easier access to music making by lowering longstanding barriers, such as technical skill requirements or formal training. In doing so, it has the potential to challenge the entrenched dichotomy in music education that privileges a musically “talented” few over the majority (Philpott and Spruce 2021; Regelski 2012), thereby disrupting traditional power dynamics. Within this skewed power structure, some music educators may find it more comfortable to share knowledge and collaborate with those they perceive as talented, using an exclusive musical language established in the system (Regelski 2020; Smith and Hendricks 2022). In contrast, other learners who benefit little from such educational practices may retreat into silence. With their musical needs and desires unvoiced, these students’ musical ideas and learning agency may go under-acknowledged and underdeveloped.

Music education researchers have discussed how technology breaks down the boundaries between formal and informal learning contexts, and between knowledge provided by teachers and knowledge acquired by learners themselves (Bell 2015; Chrysostomou 2017; Leong 2017; Quigley and Smith 2021). In the two cases above, AI technology acted as an empowering tool for both Q and me, enabling us to engage in music learning and creation in ways our backgrounds alone would not have allowed, despite our differing musical experiences and proficiencies (Bell 2015; Greher 2017; Pignato 2017).

AI as a Catalyst for Richer Communication

Q and I have been friends for almost 20 years and were classmates for six years through middle and high school. Despite this long friendship, engaging in AI composition was the first time Q and I shared insights about music making in depth. Over the past two years, our discussions have covered a wide range of topics. These included selecting the most suitable music database for input and identifying the optimal training frameworks for vectorization. Q also navigated algorithm choices for training their AI model and worked through debugging based on its initial music generation. Beyond technical matters, our conversations extended to Q’s personal opinions on popular hits in the music market and the broader intersections among music, AI technology, and history. Throughout Q’s sustained engagement with the music AI model research, I, as a music educator, recognized how silent an individual like Q may have been about their music-making aspirations. I once asked Q,[4] “You wouldn’t want to talk about music with me unless it’s related to AI, right?” Without any hesitation, Q burst out laughing and replied, “That’s true.”

During one of our interviews, Q explained that their limited formal music education in school and studio contexts had failed to nurture their interest in performance or meet their needs in music creation. In addition, Q had previously regarded me, a traditional music education practitioner, as an “expert” in music who might be unable to understand their perspective. Consequently, Q’s perception of my professional identity discouraged them from talking with me about music. This suggests that traditional music education paradigms, centered on reproducing musical heritage and slow to adapt to diverse perspectives, could not offer learners like Q inclusive access to developing their musical potential (Quigley and Smith 2021; Smith and Hendricks 2022). Nonetheless, AI technology has become a catalyst for access for Q, awakening their previously silent musical ambitions and providing them with a familiar knowledge platform through which to find their voice in musical expression. Although technology-oriented resources such as GitHub may be inaccessible to music learners who lack technological devices, internet access, or relevant knowledge, Q’s expertise in information technology enabled them to access music creation by exploring the resources available to them. Such access is individual and flexible, and it can offer directions for customized musical exploration to learners like Q.

A Sociological Lens and Musician’s Agency

As Tobias (2017) and Wright (2017) contended, examining the human-technology relationship through humanistic and sociological lenses may yield more nuanced and inclusive pedagogical insights in music education. In the field of AI development, researchers have also emphasized the importance of acknowledging the sociocultural factors that influence musical representations in AI technology. Bown (2021) examined the interplay between music creators and AI training models through a sociological lens, pointing out that music making is deeply rooted in one’s social, cultural, and political relations. Consequently, one’s identity and social class, as well as the collective consciousness of one’s environment, can shape one’s musical preferences. Bown (2021) suggested that for music AI models to create more nuanced and culturally rich music, their training must integrate these sociological elements into their databases.

For music educators and learners, engaging with music AI platforms such as Mubert could heighten enjoyment and inspiration within and beyond the classroom. However, as Gillespie (2014) pointed out, relying primarily on computational tools for expression can result in “subjecting human discourse and knowledge to these procedural logics that undergird all computation” (168). Commercial AI software may misrepresent music genres and cultures because of its non-transparent music database, unobservable learning process, and tendency to hallucinate (Cope 2021; Dahlstedt 2021). Additionally, users who lack Q’s programming skills and basic understanding of AI model construction may lose their voice in music creation and in how their cultures are represented. This implies a shift in the locus of knowledge power within music education (Bell 2015). As mentioned previously, my cultural and educational background left me barely able to judge the authenticity of my Lazy Cat R&B excerpt from Mubert. When Mubert generated the Lazy Cat R&B track using minor-seventh triplets repeating between A and G, Mubert and the developers behind it were exercising considerable autonomy over the musical representation of “a lazy cat.” As the provider of the idea, I could do nothing but accept this representation.

In Q’s case, although they could easily have used music AI models such as Mubert to fulfill their compositional needs, Q insisted on building their own music AI model. Citing an ethical consideration, Q explained to me, “When I build this model, I can control which songs I want to use as the datasets [as the input layer].… All the music AI models are biased because those who build them are AI scientists and they must have biases. If I use someone else’s model to make music, the music output represents their intentions, not mine.… What I’m building is not comprehensive, but it can represent my biases, not the others.”

Responding to the ethical and copyright concerns surrounding music AI models, Dahlstedt (2021) drew a parallel to musical instrument manufacturing. Historically, musical instruments have been crafted and refined by skilled makers and manufacturers alongside technological advances (Park 2016). Much like instrument makers, music AI scientists invest considerable effort in training AI on input data, attending to everything from technical to aesthetic nuances. Unlike traditional instruments, which, once crafted from the makers’ input, rely on musicians’ interpretation and virtuosity for their output (Wang 2016), AI demands dynamic interaction and continuous learning from its developers to serve users effectively across various potential interactions (Dahlstedt 2021). Building a music AI model that represents music inclusively requires not only musical knowledge on the developers’ end but also musicians’ voices guiding the model’s training from aesthetic perspectives.

AI’s Implications for Music Educators

Music Knowledge Representation With(out) AI

Ethical ambiguities may persist in theorizing and applying AI technology in music education for decades. However, the implications that AI technology presents to music educators extend far beyond the technology itself. In Q’s case, their journey through AI and music did not lead them to celebrate technological advancement uncritically. Although AI helped them break down boundaries and access music knowledge, Q occasionally expressed frustration that their lack of formal music training hindered their building of the music AI model. Q was also skeptical of the notion that technological development could lead to a brighter future for humans. During one of our interviews, Q said, “To be honest, I don’t think the advancement of technology can make humans’ lives better.… In history, there was once a technological breakthrough in a field of mathematics that could have benefited a much wider population, but it was banned by the [de-identified] emperor.… I think what’s related to our happiness are sociology and politics, not technology.…”

Q’s insight into restrictive power structures echoes Regelski’s (2020) critique of the Eurocentric Classical music system as a manifestation of ideological and political power that constrains students’ musical engagement. Such power, represented by the “High Culture” (Regelski 2020, 28) aesthetic standard, is Western, notation-based, result-driven, and oriented toward knowledge reproduction; it pervades music education and influences pedagogical directions. Arguing that music educators should not perpetuate an existing knowledge hierarchy that fails to enrich all students’ experiences, Regelski (2020) asserted: “Critical teachers need to use their reason to identify social, political, economic, and other ideological forces that influence them and their schools in directions opposed to the desirable curricular results needed if students are to be empowered to be effective agents of their own musical destinies” (33).

In recognizing students’ diverse needs, experiences, and interests in music making, music educators could consider diversifying their pedagogy across performance, composition, songwriting, and music production, both with and without embedded AI features. Addressing students’ cultural and individual nuances may not only facilitate meaningful music reproduction but also provide interdisciplinary and more comprehensive learning experiences, thus enabling students to develop their agency. Students may then be able to pursue their ongoing music creation independently, drawing on the expertise and capabilities they develop in and/or outside of music.

Ever since AI scientists began exploring ways of representing knowledge in music (Cope 1992; Smaill et al. 1994; Smith and Holland 1994; Westhead and Smaill 1994), the musically privileged few’s monopoly on musical knowledge has been challenged. This raises questions not only about how teachers can deliver knowledge sustainably and inclusively to the next generations (Smith and Hendricks 2022), but also about how students can be educated, and educate themselves, to face an uncertain future (Postman and Weingartner 1969). Such a future might require teachers and students “to recognize change, [and] to be sensitive to problems caused by change” (Postman and Weingartner 1969, 3), to prepare for jobs that do not yet exist (Camilleri 2022), and to recognize what they really want in the age of AI (Stanford Alumni 2023).

Facilitating Access to Music through AI

Drawing on the discussion about access, music educators may pose the following questions to themselves: “Could AI facilitate broader access to music for my students, and if so, what has been my role in their access thus far?” and “Do my pedagogical practices adequately empower students to realize their potential, with or without the aid of AI?” With regard to music creation, music AI models such as Mubert can serve as pedagogical tools that facilitate students’ access to music making and learning. For example, as shown in Figure 4, if students and teachers use Mubert to learn music creation (such as composition and songwriting), they can select from three available option categories (genres, moods, and activities) to explore how the music AI model generates musical gestures to represent music. Indeed, such music representation can raise ethical controversy in the music market, which will be discussed in the following section. However, using AI technology to broaden music access in and out of classrooms expands opportunities to include students who may have been silenced by traditional music teaching, as well as those who may not yet have been exposed to music genres outside their communities.

Figure 4: Mubert homepage-generate track (Mubert n.d.b)

Developing Agency for Broader Musical Engagements

As users interact with music AI software like Mubert, they need critical listening skills so that they do not assume their “generated” music reflects their independent musical capacity (Hein 2017). At the same time, such interaction offers both music educators and learners an opportunity to reflect on their cultural and social stances when integrating AI into their music teaching and learning. Depending on educators’ and learners’ expertise in music and technology, and on the extent to which AI features are embedded in their music tools, thorough communication and flexible practices are needed inside and outside of music classrooms.

In a chapter on musicians’ agency, Dahlstedt (2021) suggested that AI users must critically engage with the artistic choices generated by AI. Without comprehensive musical insight and critical thinking, users may find that Generative AI keeps their musical outputs at a standardized level of mediocrity. Teachers and students might consider questions such as: When Mubert’s AI scientists trained their music AI models, which R&B music did they choose as training data? Did they consider the sub-genres of R&B from different regions and racial groups? How did they vectorize parameters similarly or differently for various genres of music? In what ways did they label each parameter for deep learning? What algorithms and neural network frameworks did they use to train and optimize their models? What opaque aspects of the learning process do AI scientists need to address throughout training to prevent musical misrepresentation?
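To make the vectorization question concrete, the following minimal Python sketch is offered only as an illustration, not as a description of how Mubert or any real system is trained. It assumes that genre labels are turned into one-hot vectors, a common but by no means universal encoding, and shows how a coarse labeling scheme can make two different R&B sub-genres indistinguishable before any learning takes place. The label vocabularies and the helper functions `one_hot` and `coarsen` are hypothetical.

```python
# Hypothetical label vocabularies for illustration only; not Mubert's actual taxonomy.
COARSE_LABELS = ["R&B", "jazz", "rock"]
FINE_LABELS = ["R&B/neo-soul", "R&B/new jack swing", "R&B/contemporary", "jazz", "rock"]


def one_hot(label: str, vocabulary: list[str]) -> list[int]:
    """Return a one-hot vector marking the position of `label` in `vocabulary`."""
    if label not in vocabulary:
        raise ValueError(f"unknown label: {label!r}")
    return [1 if item == label else 0 for item in vocabulary]


def coarsen(fine_label: str) -> str:
    """Collapse a sub-genre label to its parent genre (the text before '/')."""
    return fine_label.split("/")[0]


# Two hypothetical training tracks from different R&B sub-genres.
track_a = "R&B/neo-soul"
track_b = "R&B/new jack swing"

# Under the coarse scheme, both tracks receive the same vector ...
print(one_hot(coarsen(track_a), COARSE_LABELS))  # [1, 0, 0]
print(one_hot(coarsen(track_b), COARSE_LABELS))  # [1, 0, 0]

# ... whereas the fine-grained scheme keeps them distinct.
print(one_hot(track_a, FINE_LABELS))  # [1, 0, 0, 0, 0]
print(one_hot(track_b, FINE_LABELS))  # [0, 1, 0, 0, 0]
```

A model conditioned on the coarse vectors would receive the same genre signal for both tracks, so any distinction between them would have to come from elsewhere in the data; this is one concrete way in which labeling decisions of the kind questioned above could flatten musical diversity.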

As advocated by Walzer (2024), “If the likelihood that musicians and technology specialists will cross paths increases, it makes sense to explore what music technology means from varied perspectives” (46). The evolution of Generative music AI increasingly requires skilled musicians to engage in its development, from input to output. By collaborating with AI on model training, composition, and performance, people with varying levels of formal musical training may gain fresh, dynamic avenues for examining their creative autonomy (Cosentino and Takanishi 2021). In this vein, Dahlstedt (2021) argued that AI’s mediation does not simply transfer power from one entity to another. Rather, power becomes a collective resource, with the potential to draw a wider group of music learners into a broader discourse and interaction with music.

Conclusion

Wang’s talk on music and AI (Stanford Alumni 2023) returned to human learning, emphasizing the joy of working through one’s own learning process toward self-fulfillment rather than simply generating a needed musical product. The development of Generative AI opens up new avenues for a wider demographic to engage actively and critically in music learning and creation that transcend conventional learning environments. This shift should prompt music educators to foster greater and deeper dialogue, not only among people with diverse musical backgrounds but also between musicians and AI developers, to enrich their agency by developing and using AI in more interactive, transparent, and ethical ways. By acknowledging the authority constructed in music knowledge and challenging the barriers it imposes, music educators are afforded a critical moment to scrutinize the prevailing limitations of the knowledge system and to reflect deeply on the impact of their pedagogy.

Echoing Turing’s (1950) vision, “We can only see a short distance ahead, but we can see plenty there that needs to be done” (460), the integration of AI into music and education opens a future of uncertainties and opportunities. Amid ongoing debates over the ethical use of AI, the extent to which humans are distinct from computers, and the sociological issues revealed by the development of music AI, people across cultures, disciplines, and interests can take the opportunity to keep exploring their learning to fulfill their needs in music, technology, and their lives. This uncertain future requires us to tread an open-ended journey with critical humility to consider various access possibilities to (potential) music learners, to foster richer and more imaginative interactions between humans and computers, and to transcend existing teaching practices for sustainable and inclusive music practices.

About the Author

Ran Jiang is a PhD candidate in Music Education at Western University, Canada, and a three-time recipient of the Ontario Graduate Scholarship (OGS). She serves as the convenor of the Music Technology Special Interest Group of the International Society for Music Education (ISME) from 2024 to 2026, as well as the managing editor of the Journal of Music, Technology & Education.

References

Addessi, Anna Rita, and François Pachet. 2005. Young children confronting the Continuator, an interactive reflective musical system. Musicae Scientiae 10 (1): 13–39. https://doi.org/10.1177/1029864906010001021

Alto, Valentina. 2023. Modern generative AI with ChatGPT and OpenAI models: Leverage the capabilities of OpenAI’s LLM for productivity and innovation with GPT3 and GPT4. 1st ed. Packt Publishing.

Bell, Adam Patrick. 2015. Can we afford these affordances? GarageBand and the double-edged sword of the digital audio workstation. Action, Criticism, and Theory for Music Education 14 (1): 44–65. act.maydaygroup.org/articles/Bell14_1.pdf

Bell, Adam Patrick. 2016. Toward the current: Democratic music teaching with music technology. In Giving voice to democracy in music education: Diversity and social justice, edited by Lisa C. DeLorenzo, 148–64. Routledge. https://doi.org/10.4324/9781315725628-16

Besold, Tarek Richard. 2013. Turing revisited: A cognitively-inspired decomposition. In Philosophy and theory of artificial intelligence, edited by Vincent C. Müller, 121–32. Springer. https://doi.org/10.1007/978-3-642-31674-6

Boden, Margaret A. 1990. Escaping from the Chinese room. In The philosophy of artificial intelligence, edited by Margaret A. Boden, 89–104. Oxford University Press.

Bowman, Wayne, and Ana Lucía Frega. 2012a. Introduction. In The Oxford handbook of philosophy in music education, edited by Wayne Bowman and Ana Lucía Frega, 3–14. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780195394733.013.0001

Bowman, Wayne, and Ana Lucía Frega. 2012b. What should the music education profession expect of philosophy? In The Oxford handbook of philosophy in music education, edited by Wayne Bowman and Ana Lucía Frega, 17–36. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780195394733.013.0002

Bown, Oliver. 2021. Sociocultural and design perspectives on AI-based music production: Why do we make music and what changes if AI makes it for us? In Handbook of artificial intelligence for music, edited by Eduardo Reck Miranda, 1–20. Springer. https://doi.org/10.1007/978-3-030-72116-9_1

Camilleri, Patrick. 2022. Planning at the edge of tomorrow: A structural interpretation of Maltese AI-related policies and the necessity for a disruption in education. In Artificial intelligence in higher education, edited by Prathamesh Padmakar Churi, Shubham Joshi, Mohamed Elhoseny, and Amina Omrane, 1st ed., 63–80. CRC Press. https://doi.org/10.1201/9781003184157-3

Chrysostomou, Smaragda. 2017. Human potential, technology, and music education. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 219–24. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.20

Cope, David. 1992. Computer modelling of musical intelligence in EMI. Computer Music Journal 16 (2): 69–83. https://doi.org/10.2307/3680717

Cope, David. 2021. Music, artificial intelligence and neuroscience. In Handbook of artificial intelligence for music, edited by Eduardo Reck Miranda, 163–94. Springer. https://doi.org/10.1007/978-3-030-72116-9_7

Cosentino, Sarah, and Atsuo Takanishi. 2021. Human–robot musical interaction. In Handbook of artificial intelligence for music, edited by Eduardo Reck Miranda, 799–822. Springer. https://doi.org/10.1007/978-3-030-72116-9_28

Dahlstedt, Palle. 2021. Musicking with algorithms: Thoughts on artificial intelligence, creativity, and agency. In Handbook of artificial intelligence for music, edited by Eduardo Reck Miranda, 873–914. Springer. https://doi.org/10.1007/978-3-030-72116-9_31

Franchi, Stefano. 2013. The past, present, and future encounters between computation and the humanities. In Philosophy and theory of artificial intelligence, edited by Vincent C. Müller, 349–64. Springer. https://doi.org/10.1007/978-3-642-31674-6

Freed, Sam. 2013. Practical introspection as inspiration for AI. In Philosophy and theory of artificial intelligence, edited by Vincent C. Müller, 167–78. Springer. https://doi.org/10.1007/978-3-642-31674-6

Freire, Paulo. 1974. Education for critical consciousness. 2005 ed. Continuum.

Gillespie, Tarleton. 2014. The relevance of algorithms. In Media technologies: Essays on communication, materiality, and society, edited by Tarleton Gillespie, Pablo J. Boczkowski, and Kirsten A. Foot, 167–93. The MIT Press.

GitHub. n.d. Repository search results: Music21. GitHub. https://github.com/search?q=music21&type=repositories

Greher, Gena. 2017. On becoming musical: Technology, possibilities, and transformation. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 323–28. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.30

Hein, Ethan. 2017. The promise and pitfalls of the digital studio. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 233–40. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.22

Lakoff, George. 1987. Women, fire, and dangerous things: What categories reveal about the mind. University of Chicago Press.

Leong, Samuel. 2017. Prosumer learners and digital arts pedagogy. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 413–20. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.39

Louie, Ryan, Andy Coenen, Cheng Zhi Huang, Michael Terry, and Carrie J. Cai. 2020. Novice-AI music co-creation via AI-steering tools for deep generative models. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–13. ACM. https://doi.org/10.1145/3313831.3376739

McEnery, Tony, and Andrew Wilson. 1996. Corpus linguistics. Edinburgh University Press.

Morse, Anthony F., Carlos Herrera, Robert Clowes, Alberto Montebelli, and Tom Ziemke. 2011. The role of robotic modelling in cognitive science. New Ideas in Psychology 29 (3): 312–24. https://doi.org/10.1016/j.newideapsych.2011.02.001

Mubert. 2024. An R&B song about a lazy cat (cd738712bdaf4933aade2877b1f70e83). Audio, 0:45. https://drive.google.com/file/d/1AxTLbYlc62A8AMYT8lJIxGctjGI3kGIZ/view?usp=drive_link

Mubert. n.d.a. AI music has no boundaries. https://mubert.com/#:~:text=has%20no%20boundaries-,The%20abilities,-of%20Artificial%20Intelligence

Mubert. n.d.b. https://mubert.com/render

Ness, James, Lolita Burrell, and David Frey. 2022. Truth in our ideas means the power to work: Implications of the intermediary of information technology in the classroom. In The frontlines of artificial intelligence ethics: Human-centric perspectives on technology’s advance, edited by Andrew J. Hampton and Jeanine A. DeFalco, 63–83. Routledge. https://doi.org/10.4324/9781003030928-7

Omohundro, Steve. 2014. Autonomous technology and the greater human good. Journal of Experimental & Theoretical Artificial Intelligence 26 (3): 303–15. https://doi.org/10.1080/0952813X.2014.895111

Park, Tae Hong. 2016. Instrument technology: Bones, tones, phones, and beyond. In The Routledge companion to music, technology, and education, edited by Andrew King, Evangelos Himonides, and S. Alex Ruthmann, 15–22. Taylor & Francis Group.

Philpott, Chris, and Gary Spruce. 2021. Structure and agency in music education. In The Routledge handbook to sociology of music education, edited by Ruth Wright, Panagiotis A. Kanellopoulos, and Patrick Schmidt, 288–99. Routledge.

Pignato, Joseph. 2017. Situating technology within and without music education. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 203–16. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.19

Postman, Neil, and Charles Weingartner. 1969. Teaching as a subversive activity. Delacorte Press.

Quigley, Nicholas Patrick, and Tawnya D. Smith. 2021. The educational backgrounds of DIY musicians. Journal of Popular Music Education 5 (3): 397–417. https://doi.org/10.1386/jpme_00053_1

Regelski, Thomas A. 2012. Ethical dimensions of school-based music education. In The Oxford handbook of philosophy in music education, edited by Wayne Bowman and Ana Lucía Frega, 284–304. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780195394733.013.0016

Regelski, Thomas A. 2020. Tractate on critical theory and praxis: Implications for professionalizing music education. Action, Criticism, and Theory for Music Education 19 (1): 6–53. https://doi.org/10.22176/act19.1.6

Smaill, Alan, Geraint A. Wiggins, and Eduardo Miranda. 1994. Music representation – between the musician and the computer. In Music education: An artificial intelligence approach, edited by Matt Smith, Alan Smaill, and Geraint A. Wiggins, 108–19. Springer-Verlag.

Smith, Matt, and Simon Holland. 1994. Motive: The development of an AI tool for beginning melody composers. In Music education: An artificial intelligence approach, edited by Matt Smith, Alan Smaill, and Geraint A. Wiggins, 41–55. Springer-Verlag.

Smith, Tawnya D., and Karin S. Hendricks. 2022. Diversity, inclusion, and access. In The Oxford handbook of music performance, Volume 1, edited by Gary E. McPherson, 527–50. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190056285.013.20

Stanford Alumni. 2023. Music & AI: What do we really want with Ge Wang. https://www.youtube.com/watch?v=FlccLavPQhk

The Data Scientist. n.d. Simple neural network vs deep learning neural network. Image. The Data Scientist. https://thedatascientist.com/what-deep-learning-is-and-isnt/

Tobias, Evan S. 2017. Re-situating technology in music education. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 291–308. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.27

Tsuchiya, Yuichi, and Tetsuro Kitahara. 2019. A non-notewise melody editing method for supporting musically untrained people’s music composition. Journal of Creative Music Systems 3 (1): Article 1. https://doi.org/10.5920/jcms.624

Turing, A. M. 1950. Computing machinery and intelligence. Mind 59 (236): 433–60.

Ventura, Michele Della. 2019. Exploring the impact of artificial intelligence in music education to enhance the dyslexic student’s skills. In Learning technology for education challenges, edited by Lorna Uden and Dario Liberona, 14–22. Springer International Publishing. https://doi.org/10.1007/978-3-030-20798-4_2

Ventura, Michele Della. 2022. Compensatory skill: The dyslexia’s key to functionally integrate strategies and technologies. In Learning technology for education challenges, edited by Lorna Uden, Dario Liberona, Galo Sanchez, and Sara Rodríguez-González, 153–63. Springer International Publishing. https://doi.org/10.1007/978-3-031-08890-2_12

Vygotsky, Lev. 1962. Thought and language. The MIT Press.

Walzer, Daniel. 2024. Leadership in music technology education: Philosophy, praxis, and pedagogy. Routledge.

Wang, Ge. 2016. The laptop orchestra. In The Routledge companion to music, technology, and education, edited by Andrew King, Evangelos Himonides, and S. Alex Ruthmann, 159–69. Taylor & Francis Group.

Westhead, Martin D., and Alan Smaill. 1994. In Music education: An artificial intelligence approach, edited by Matt Smith, Alan Smaill, and Geraint A. Wiggins, 157–70. Springer-Verlag.

Wright, Ruth. 2017. A sociological perspective on technology and music education. In The Oxford handbook of technology and music education, edited by S. Alex Ruthmann and Roger Mantie, 345–50. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780199372133.013.33

Notes

[1] According to Besold’s (2013) explanation, the Turing Test refers to using a computer to mimic human behaviours through text-based human-computer conversations, without the computer understanding the meanings of the texts. Meanwhile, a human agent serves as a judge to determine whether the output generated in the conversations came from a computer or a human. The assessment criteria of the Turing Test are: “SubTuring I: Human Language Understanding; SubTuring II: Human Language Production; SubTuring III: Human Rationality; and SubTuring IV: Human Creativity” (Besold 2013, 128).

[2] This study received ethics approval from the Research Ethics Board at Western University in 2023. Consent was obtained from the research participant.

[3] GitHub (https://github.com/) is an online platform on which developers host and share open-source code and programming projects.

[4] All the quoted conversations between Q and me were in Mandarin Chinese. They have been translated into English unless otherwise specified.