EMMETT O’LEARY

Virginia Polytechnic Institute and State University (USA)

June 2025

Published in Action, Criticism, and Theory for Music Education 24 (3): 138–64. https://doi.org/10.22176/act24.3.138

Abstract: Artificial intelligence (AI) presents a unique technological quandary for music educators. Never before has a new tool been lauded and feared to the degree that AI is presently. As AI is an emerging influence in music teaching and learning, in this paper, I examine the past to inform critical action moving forward. Using prior literature in music and education technology integration, platform and media studies, and critical literacies, I explore possible consequences and considerations for music educators’ engagement with AI. I suggest that possible outcomes range from music educators largely ignoring AI and continuing with pedagogical practices uninterrupted, to broad disruptions in the way music educators do their work leading to a potential deskilling and deprofessionalization of our field. I suggest frameworks from critical curriculum and data literacies as means to guide future action.

Keywords: Artificial intelligence, algorithms, music technology

In May of 2024, Apple introduced its new iPad Pro through a streamed presentation. As they heralded the new iPad’s capabilities and features, they also unveiled a new ad campaign to entice consumers to purchase the device. The ad, entitled “Crush!”, featured an assortment of creative tools stacked in a pyramid between the plates of a giant hydraulic press. Over the next minute, viewers watched the press destroy a trumpet, piano, metronome, assorted paints and art supplies, and countless other tools of artists and creators. As the press returned to its original position, only the new iPad remained. Apple’s goal was likely to communicate that users could engage in any of these creative disciplines by purchasing the new iPad, but instead, they ignited a swift and fierce negative reaction. Technology journalists called the ad “tone deaf” and wrote articles with headlines that declared “Apple doesn’t understand why you use technology” (Lopatto 2024). Similarly, marketing professor Americus Reed, in an interview for Variety magazine, explained that the ad amplified “the fear that consumers have of tech and generative AI kind of destroying humanity, and there’s this uncertainty about how technology and social media are taking over our lives” (Spangler 2024, para. 4). While Apple apologized for the ad, explaining it had “missed the mark,” the ad and the reaction to it manifested the broad feelings of trepidation and uncertainty that cloud AI in present discourse.

It is difficult to discuss AI without acknowledging the fear that accompanies it. For example, the title of Neil Selwyn’s (2019) book on the topic succinctly asks the question “Should robots replace teachers?” While the fear of AI potentially obviating or replacing the profession that I, and I anticipate most other authors in this issue, occupy is real, there are myriad possible outcomes, ranging from AI improving the quality and nature of music educators’ work to negative and deleterious effects on the music learning of children throughout the world.

The potential disruption and damage to music education by AI is perhaps sui generis, yet its potential to change music will largely be a function of the degree to which it is integrated into educators’ work. As it is difficult to predict the future, it is potentially instructive to examine the past to inform critical action moving forward. Given that AI integration is nascent in music education at present, the purpose of this paper is to draw on prior literature in music technology integration, platform and media studies, and critical literacies to explore possible consequences and considerations for music educators’ engagement with AI. I begin with a discussion of technology integration in music education, move to a summary of the critical literacies that could inform future work with AI, and then discuss issues related to AI’s influence in music teaching and learning, including potential training data, algorithmic influences, and ethical considerations.

Technology Integration

Music educators’ use of AI is foundationally a question of technology integration and behavior change. For AI to influence teachers’ work, they will need to adapt their existing professional behaviors in planning, instruction, assessment, or administration to include these new tools. Broad adoption of AI would contradict prior patterns of technology integration in music education showing that foundationally, teachers’ use of technology has been limited for instructional purposes (Bauer 2020; Dorfman 2015; O’Leary and Bannerman 2023) and traditional teaching practices are resilient (Tyack and Cuban 1995). In this section, I use a framework for understanding technology integration and previous research in music education technology integration to explore possible barriers and challenges to broad AI adoption in music education contexts.

To better understand technology integration, Moore (2014) offers a framework that positions behavior change as a central force and limitation in technology adoption. Moore explains “[a person’s attitude] toward technology adoption becomes significant any time we are introduced to products that require us to change our current mode of behavior or to modify other products and services we rely on” (21). He labels products that require substantive changes as discontinuous or disruptive innovations since they demand considerable effort in adoption and use. In contrast, “continuous” or “sustaining innovations” refers more to the use of technologies that require limited changes in users’ behaviors. Moore indicates that most industries “introduce discontinuous innovations only occasionally and with much trepidation” (23).

Prior research in technology integration suggests that educators’ attitudes toward technology are central to technology integration (Ertmer et al. 2012; Moore 2014; Tondeur et al. 2020). Moore (2014) posits that technology adoption generally begins with people enthusiastic about technology and willing to take on the extra work of using new tools; he labels this group as innovators and early adopters. They are often excited about the potential of the technology and take on the challenges of navigating tools where there may be few support resources available. Yet Moore (2014) suggests that a chasm exists between these technology enthusiasts and much of the population who change their behavior only if the technology has a clear benefit. For a technology to be widely used, it must cross the chasm to reach wide adoption. Viewed through Moore’s (2014) framework, the scope of AI’s use in music education will be dependent on the degree to which it presents capabilities so compelling that music educators, beyond the initial early adopters, are willing to adjust their behaviors.

Prior research suggests that few technologies requiring discontinuous change have crossed the chasm in music education (Bauer 2014). For example, music educators’ development of technology-based curricular offerings such as music production or music technology courses aligns closely with Moore’s (2014) framework. Researchers suggest that these offerings have been the product of innovative educators engaging in self-directed professional development and advocacy (Bauer 2014; Dammers 2010a, 2010b, 2012; Dorfman 2015, 2016; Testa 2021). These early adopters and innovators laid the groundwork for what Dorfman (2022) labeled “Technology-Based Music Education,” or music experiences where technology is the primary means through which students engage with creating, performing, or responding to music. Yet these courses have yet to cross the chasm and become as widely present as legacy curricular offerings (Elpus 2020) such as large ensembles and general music, contexts where technology has had more limited pedagogical integration (Bauer 2020). Additionally, preservice music educators report generally feeling unprepared to teach in these technology-based contexts and desire additional support in integrating technology into their instruction (Bannerman and O’Leary 2021; Haning 2016).

There are more examples where continuous changes have reached broader adoption by music educators. However, these largely include technology educators use for administrative aspects of their work such as communication, grading, and managing their music programs (Dorfman 2015). These areas are spaces where AI’s inclusion may be welcome. To this point, Selwyn (2019) suggested that AI could present a chance for teachers to do less work, allowing them to engage more deeply with core instructional responsibilities. He explains, “at best, these (AI) are technologies that are imagined as taking care of many of the ‘routines,’ ‘duties,’ and ‘heavy lifting’ associated with teaching,” allowing teachers to be “freed-up to engage in meaningful acts of leading, arranging, explaining, and inspiring” (135). Should AI promise increased efficiencies for teachers, it may be the case that many will choose to adopt the tools and change behaviors due to the promises of increased productivity and opportunities to focus on more rewarding aspects of their jobs. However, in this environment, the impact of AI will not be directly manifested in teachers’ interactions with students.

Emerging scholarship offers teachers guidance on potential uses of AI (Holster 2024), discusses the promise and challenges of AI in music education research (Rohwer 2024), and examines AI for planning instruction (Cooper 2024) and assessment (Shaw 2024). Yet, there is no evidence that AI’s influence has yet “crossed the chasm” to the broader profession. The present time may represent an inflection point where the critique and scholarship that comes during this period could inform and shape how AI might move beyond early adopters and innovators and shape how the broader population of music educators engage with AI.

The Need for Critical Literacies

Critical literacies facilitate a greater understanding of the technology teachers use and how it influences students’ musical experiences. Waldron’s (2018) work regarding online platforms and music education is instructive as she commented “educating ourselves on the complex intricacies of how the Web works is the first place to begin” (107). This knowledge is a precursor to critical inquiry and this notion aligns with broader ideas around critical digital literacies, which Jones and Hafner (2021) posit might help educators examine “how the affordances and constraints [of technology] advance particular ideologies or the agendas, or particular people” (135). This is particularly important as educational technology is often advertised hyperbolically (Selwyn 2022) through marketing and discourse infused with techno-solutionism (Morozov 2013) and technological determinism (Ruthmann et al. 2015). Without a framework through which to better understand technology in their work, teachers may be ill-prepared to make critical judgments about new technological developments like AI.

Digital literacies go beyond prior frameworks for technology integration such as the Technological, Pedagogical, and Content Knowledge (TPACK) and Substitution, Augmentation, Modification, and Redefinition (SAMR) models (Bauer 2014; O’Leary 2022). TPACK (Bauer 2013; Mishra and Koehler 2006) is an extension of Shulman’s (1986) pedagogical content knowledge and suggests that teachers need to employ specific technological understandings to meaningfully integrate technology into their work. The framework shows that teachers who have developed technological-pedagogical-content knowledge would use technology in ways that enhance and support students’ learning. Similarly, the SAMR model (Puentedura 2010) illustrates that teachers should evaluate a technology’s capacity to substitute for existing tools or augment, modify, or redefine a learning task. Through SAMR, teachers learn to examine how a technology may add new possibilities for student learning and engagement beyond merely replacing an existing tool or process. In both frameworks, the technology is largely taken at face value, and little concern is given to the broader consequences of a tool’s use.

SAMR and TPACK can help a teacher make pedagogical decisions but are insufficient to examine the critical ethical and curricular issues associated with AI. Music educators will need to expand the technology-based literacies they bring to their work to meet this challenge. To this point, Pangrazio and Selwyn (2023) discuss a “new literacies approach” that “recognizes the benefits of being able to unpack the politics of everyday texts and, most importantly, for these understandings and dispositions to inform people’s digital literacies” (83). As a way forward, I suggest two literacies that should inform educators’ engagements with AI: critical curriculum literacy and critical digital literacy.

Critical Curriculum Literacy

There is little doubt that educators are increasingly using online resources for curriculum materials, professional development, and educational support (Carpenter et al. 2022; Shelton et al. 2022). The scholarship in this area is unresolved as to whether this reflects elements of teacher leadership (Schroeder and Curcio 2022) or even educators’ agentic engagement in curriculum development. However, consistent throughout the discourse is that the use of these resources suggests a movement in curriculum development to more of a curatorial process, where educators are selecting resources rather than creating them (Harris et al. 2021; Sawyer et al. 2020; Schroeder and Curcio 2022). Schroeder and Curcio (2022), for example, developed critical curriculum literacy as a means of infusing curriculum decisions with knowledge of the “power relationships involved in the creation, selection, and promotion of resources” on online platforms and marketplaces (132). They suggest that critical curriculum literacy aids teachers in being able to “identify poor pedagogical practices and content inaccuracies” (138) and to more skillfully vet the materials they encounter online.

In the case of AI, critical curriculum literacy may be foundational in supporting teachers in asking questions about the quality of the responses generated by AI tools. As Holster (2024) acknowledged, “AI outputs are only as good as their inputs … and the output might not include every known aspect of a subject, limiting the scope of the platform’s knowledge” (6). With that limitation in mind, music educators might use critical frames already present in music education around repertoire selection (Allsup 2010), pedagogical approaches (Benedict 2010), and broader ideas around judgment and curricular choice. The result is a process in which teachers ask questions regarding whose perspectives informed the AI’s output, whose perspectives might be missing, and if these pedagogical recommendations align with their values in their teaching context. Critical curriculum literacy empowers educators and counteracts some of the algorithmic effects that might deskill or make them more passive.

Critical Digital and Data Literacy

Complementary to understandings and literacies regarding curriculum is the concept of critical digital literacy. Jones and Hafner (2021) suggest that critical digital literacy “is a conscious stance–a stance that puts you in the position to interrogate digital media, the texts that you encounter through them, and the institutions that produce, promote, or depend on them” (135). For music educators’ engagement with AI and other online platforms, this is where understanding how these tools work can inform engagement. For example, critical digital literacy might involve understanding that an AI platform algorithmically recommends solutions based on a dataset from various sources. Further, teachers might consider that the tool was built by a company with values and goals that inform how the product works, and that AI may provide responses that are of questionable accuracy and based on substandard resources (Holster 2024). Extending this conscious stance might further lead to questions of critical data literacy, a means for “people and communities to engage more critically with digital data and the ongoing datafication of everyday life” (Pangrazio and Selwyn 2023, 94). With critical data literacy music educators might ask questions regarding the information a platform has about them, if they post materials online how those materials might be subsumed by AI, and how these data ultimately shape their classrooms. Each literacy has the potential to inform choices at the classroom level that would potentially inform educators’ adoption of AI. In the following sections, I explore some of the potential challenges of AI and how critical literacies may support further engagement with this emerging technology in music teaching and learning contexts.

Teaching and Learning

AI has the potential to dramatically shape music teaching and learning and several practical and ethical issues are embedded in teachers’ AI adoption choices. The most pressing form of AI in much of music education AI discourse is the “large-language model” (LLM) (Cooper 2024; Holster 2024). These tools include products such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude. The models themselves are not “intelligent;” rather, as Holster (2024) explained, “these models … generate text that mimics human language by predicting the probability of a word given its context in a sentence. However, their intelligence is reflective of their training and not an inherent understanding or consciousness” (2). Understanding that these models are only as intelligent as the data on which they were trained is a central part of the critical literacy music educators require to evaluate AI’s usefulness in teaching and learning. In the following section, I explore and problematize the potential sources that LLMs draw on for music- and education-related responses.
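The idea in Holster’s (2024) description, that a model predicts the probability of a word given its context, can be illustrated with a deliberately tiny sketch. The example below is not how commercial LLMs are built (they rely on large neural networks trained on vastly more text); it is a simple word-pair counting model, and the three-sentence corpus and function names are hypothetical, chosen only for illustration. It shows that the “prediction” is entirely a reflection of the training text.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data"; a real LLM trains on vastly more text.
corpus = (
    "keep the corners firm . "
    "keep the corners firm . "
    "keep the air steady ."
)

def train_bigram_counts(text):
    """Count how often each word follows each preceding word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, context_word):
    """Turn raw follow-counts into probabilities for the next word."""
    followers = counts[context_word]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

counts = train_bigram_counts(corpus)
probs = next_word_probabilities(counts, "the")
```

In this sketch, “corners” is judged more likely than “air” after the word “the” only because it appeared more often in the toy corpus, and a word the model never saw, such as “tuba,” yields no prediction at all. The model has no way to judge whether the advice in its training text was pedagogically sound.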

Curriculum Materials, Edu-Influencers, and AI Training

The data LLMs use for training is presently one of the most heavily litigated topics in technology. These models require vast data sets and have coopted material from throughout the internet for their use, often without permission. For example, a recent New York Times article (Metz and Thompson 2024) documented how corporations building AI tools have scraped audio transcriptions of YouTube videos, user-generated text in Google Docs, the entirety of Wikipedia, and discussion posts from social media platforms like Reddit to power their products. As educators increasingly consult online sources and social media for professional development and curriculum work (Brewer and Rickels 2014; Koner and Eros 2019; Palmquist and Barnes 2015; Rickels and Brewer 2017), there is a potential for AI to amplify the influences of these platforms and the materials posted on them. A music educator who has developed critical curriculum literacy (Schroeder and Curcio 2022) would be specifically concerned with the sources informing an AI’s response. Yet LLMs often obscure or hide the sources and provenance of the recommendations they provide. Additionally, the training that informs LLMs’ responses may be based on resources posted online with limited vetting or quality control. As an example of possible sources for AI tools, scholarship investigating online curricular marketplaces and social media postings by educational influencers can be instructive.

Studies of online curriculum marketplaces foreground issues with unvetted pedagogical resources available online. Recent work shows that online marketplaces are a growing source of teachers’ curriculum materials (Kaufman et al. 2020) and platforms like TeachersPayTeachers.com offer thousands of curricular materials such as worksheets, activities, classroom decorations, and lesson plans. As these marketplaces grow, the quality of the materials sold through them becomes a particular concern for educators. The most recent study (Shelton et al. 2022) of the corpus of materials on TeachersPayTeachers.com, for example, suggests that more than 600,000 new materials are posted each year, with roughly 1% of those being dedicated to music. The result is a substantial corpus of thousands of music-specific pedagogical resources of mixed quality (O’Leary and Bannerman 2025). Additionally, these platforms offer few features to aid teachers in evaluating the products available, and educators are responsible for determining if resources are useful and appropriate for their teaching contexts (Carpenter and Shelton 2022; Polikoff 2019; Shelton et al. 2022). Scholars’ evaluations of materials on online curriculum marketplaces suggest reasons for concern; as Aguilar et al. (2022) explain, “what proves to be popular and profitable [on these platforms] may not actually be beneficial to students” (8). Similarly, Silver (2022) explained that present scholarship suggests the materials on these sites are “low-quality resources” (456), and according to Polikoff (2019), “the majority of these materials are not worth using” (5). Yet these materials represent perhaps one of the largest repositories of online curriculum resources available, and scholars suggest that platforms like TeachersPayTeachers.com can shape curriculum discourse and “redefine what constitutes an education” (Shelton et al. 2022, 268). AI may further amplify that influence.

Concerns related to curriculum marketplaces extend further to online content generated on social media platforms. A growing community of teachers is building substantial followings and achieving levels of microcelebrity (Marwick 2015) through their efforts as “education influencers,” a type of social media influencer who gains renown through sharing teaching content through social media platforms (Carpenter et al. 2022; Shelton and Archambault 2019). The communities that education influencers develop are substantial and well-documented in music education scholarship examining music educators making a living teaching through online platforms (e.g., Baym 2018; Cayari 2011; O’Leary 2020, 2023). Yet the material they share comes with the same issues as resources from online curriculum marketplaces. Their work is not evaluated for quality, and online education influencers’ motivations may not be completely altruistic, as the potential for them to profit through their status presents a conflict of interest (Shelton et al. 2022). Further, like materials on curriculum marketplaces, online posts may be guided by platform-based metrics that privilege elements such as watch time, views, and likes over the quality of any pedagogical interaction (O’Leary 2023). To this point, Carpenter and Shelton (2022) further explained the conflict embedded in education influencers’ work on social media platforms, stating, “regardless of their motivations, education influencers are enacting a new kind of teacher leadership, and are doing so within the often flawed, commercialized social media platforms available to them” (12).
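The concern that platform metrics can privilege engagement over pedagogical quality can be made concrete with a small sketch. Everything here is hypothetical: the two resources, their numbers, the expert rating, and the scoring formula are invented for illustration and do not describe any actual platform’s ranking system.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    title: str
    views: int
    likes: int
    reviewer_quality: float  # hypothetical 0-1 rating by an expert reviewer

def engagement_score(resource):
    """Hypothetical platform metric: only views and likes count."""
    return resource.views + 10 * resource.likes

# Invented example data, not drawn from any real marketplace.
resources = [
    Resource("Cute rhythm worksheet", views=50_000, likes=4_000, reviewer_quality=0.3),
    Resource("Sequenced embouchure unit", views=2_000, likes=150, reviewer_quality=0.9),
]

# The same two resources, ordered by two different sets of values.
by_engagement = sorted(resources, key=engagement_score, reverse=True)
by_quality = sorted(resources, key=lambda r: r.reviewer_quality, reverse=True)
```

Under these assumptions the two orderings disagree: the engagement metric places the popular worksheet first, while the expert rating places the sequenced unit first. A tool that inherits the first ordering from a platform never consults the second.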

I suggest that AI results based on materials from online curriculum marketplaces and edu-influencer content present two potential dangers for music educators. First, the LLMs train on available data without evaluating the quality of the materials through any set of pedagogical values. If anything, the LLM relies on the commercialized platforms’ datafied metrics (O’Leary 2023; Van Dijck 2018) that show engagement through spurious curatorial tools (Carpenter and Shelton 2022). Without critical curriculum literacy and the opportunity to evaluate LLMs’ sources, low-quality resources may proliferate as teachers enact teaching and learning recommendations provided by AI based on unreliable data. Second, the use of LLMs may weaken teachers’ opportunities to engage critically with curriculum development and in the process cede meaningful elements of their decision-making to tools not designed specifically for that purpose.

Influences on the Curricular Ecosystem

The literacies needed to engage with AI-influenced curriculum and pedagogy present a disruption to what Hodge et al. (2019) call the “ecology of the curriculum marketplace” (426). This ecology positions three entities interacting to form the curriculum economy: curriculum suppliers, curriculum demanders, and demand influencers. Curriculum suppliers generate materials that are sold. Traditionally this might include book and music publishers and related industries. Curriculum demanders, such as teachers or school districts, purchase the materials, and demand influencers shape the materials that are produced and purchased. Social media platforms and online curriculum marketplaces lower the barriers to becoming a curriculum supplier. Anyone can post learning materials for sale through these platforms. There is no vetting, curation, or quality control (Shirky 2008). What oversight does exist is often limited to legal compliance and what Shelton et al. (2022) labeled as “moderation theater” (285). AI tools have the potential to aggregate and repackage these materials further and amplify the disruption by supplanting the curriculum suppliers. Educational influencers have opportunities to serve as demand influencers and shape teachers’ choices (Carpenter et al. 2022), yet AI tools may simultaneously limit that influence by supplanting the advisory role the influencers occupy and coopting the influencers’ materials through their models. The result is not only a corpus of training data with clear issues of quality but also a situation where curriculum demanders have less clarity in evaluating recommendations.

Algorithmic Complications

With AI, algorithms take on an increasing role and authority in how teachers get information. Conceptually, algorithms are relatively simple; as Chayka (2024) explained, algorithms are “any formula or set of rules that produces a desired result” (10). LLMs use algorithms to generate responses to user queries through mathematical processes beyond most users’ understanding (Luitse and Denkena 2021). The complexity comes with an element of opaqueness that makes the process by which results are generated mysterious and at times confounding.

The opaqueness of algorithms is further complicated by their ubiquity. For example, a decade ago Thibeault (2014) posited that algorithms would be central elements of how we encounter new music and media, and his prediction was largely accurate. Algorithms have become central aspects of our lives online. The “For You” page on Instagram or TikTok, YouTube’s homepage, and recommendations by retailers like Amazon.com are all algorithmically produced. The ubiquity of algorithms is at least in part a function of their usefulness. For platforms, algorithms provide a means to encourage users’ further engagement (Burgess and Green 2018; Poell, Nieborg, and Duffy 2022), and users benefit by viewing recommendations that may align with their interests. However, the combination of ubiquity and a lack of transparency presents ethical issues for music educators’ engagement with AI.

Algorithms, as they are used in AI tools like LLMs, obscure connections that were previously foundational to internet use. The core of this problem is structural. AI stands between people who create content online and those who would consume it. It performs the same matchmaking role as many platforms (Poell, Nieborg, and Duffy 2022), but instead of connecting users to content, and to the people who created it, AI detaches and decontextualizes its responses. This is an extension of an ongoing broader phenomenon. In a 2024 presentation at the SXSW conference, Patreon CEO and musician Jack Conte explained how he has seen algorithms disrupt the relationship between artists and audiences. He suggested that the invention of the “follow” was vital to the development of cultural creation online. The act of following formed a relationship between the creator and the audience and allowed the creation of what Conte called “creator-led communities.” Conte charted a historical progression of content creation on the internet, beginning when few could share content in the 1990s, moving through Web 2.0 and the democratization of content creation online in the 2000s and the rise of the algorithmic feed and ranked content in the 2010s, and arriving at algorithms and platform interventions that could lead to “the death of the follower” in our present time. Conte goes on to explain the consequence of increasing algorithmic influence: “the single most important problem facing creative people today is the weakening of creator-led communities, of our distribution channels to our fans. This is the hardest, most challenging, and most painful issue facing the present and future of creativity on the internet” (timestamp 25:21).

For educators, this poses the challenge of disconnecting people from the sources of the material that may influence their music teaching and learning decisions. The process makes the internet less personal and mechanizes the relationships people form through online engagement. For example, a person asking music performance questions to an AI may never connect with some of the thriving online music communities documented in prior scholarship (Cayari 2011; O’Leary 2022; Waldron 2018). They may also be less likely to follow a particular educator or influencer on social media so they can critically engage with their work or connect with a community of fellow educators.

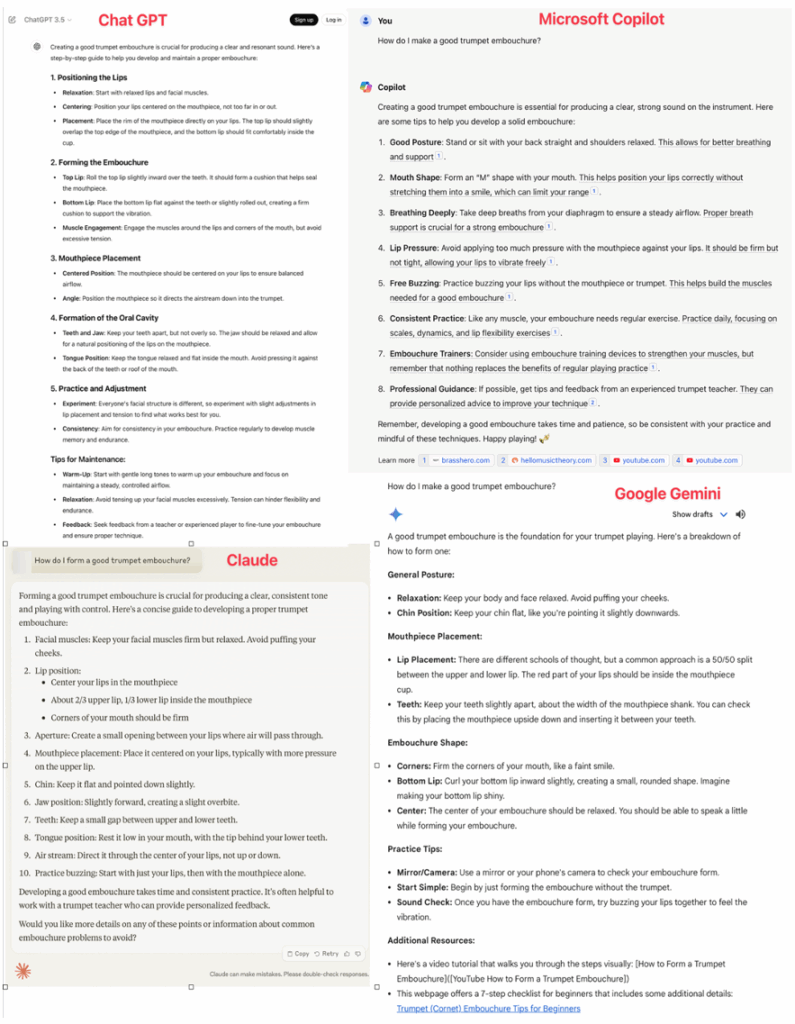

Examining the contrast between AI-generated responses and those offered by a search engine or platform is instructive. Below are responses to a music pedagogy question that I searched on YouTube and results to the same question from four AI Large Language Models (Google’s Gemini, Microsoft Copilot, ChatGPT by OpenAI, and Claude by Anthropic). The question was: “How do I make a good trumpet embouchure?” The LLM results, pictured below, are similar, but the degree to which they connect to other materials differs. Both Claude and ChatGPT provide answers with no clear links to where the responses came from. In contrast, Gemini and Copilot offered similar text-based answers, but at the bottom offered links to sources and connections where the learner could engage more with the content. Here AI has severed or weakened the connection between the learner and the creator. By aggregating the responses, no further engagement is needed from the user. I recognize that many would see this as a core feature of the tool, not a limitation, but if the responses were insufficient to the user, only two of the four LLMs offer a way to investigate further. The user is largely left to fend for themselves in vetting the responses, and notably, Claude even warns the user that its responses may be inaccurate and require verification.

This is a notable contrast to similar searches using a platform such as YouTube. Posing the same question on YouTube, I was shown a variety of possible videos to watch. YouTube offers some guidance by highlighting sections that might be relevant to my search, and I can at least employ some criticality in assessing the materials by comparing the results, learning about who made them through the information they share on their channels, and making a more informed choice about how to engage. If I found the material valuable, I might choose to subscribe to the channel and engage with more content from the same creator. Further connections to other content creators and channels are possible and facilitated in this exchange. While these results are still algorithmically created and not exempted from the biases and challenges associated with algorithmic curation (Gillespie 2014), there is at least an opportunity to apply critical literacies to the result.

Figure 1. Results from four large language models to the question “How do I make a good trumpet embouchure?” Models include ChatGPT (Top-left), Microsoft Copilot (Top-right), Claude (Bottom-left), and Google Gemini (Bottom-right).


In this case, AI’s efficiency is both a feature and a detriment. It enables access to quick information but prevents critical evaluation. AI aggregates data from multiple sources but disconnects the user from the creators of the material. While these issues are germane to the practical use of any AI tools, there are additional ethical considerations that should be a part of music educators’ decisions to use AI in their work.

Ethical Considerations

I speculate that there are two impending ethical issues embedded in educators’ use of AI in music teaching and learning. The first centers broadly around creative rights and the coopting of educational creators’ materials by AI corporations, and the second is related to the opacity and embedded authority of these tools.

Embedded in the prior discussion of algorithms is the notion that LLMs, and the companies that operate them, profit by using the labor and products of others. As I discussed earlier in this paper, LLMs are only as good as the data on which they train. While educators should be concerned with the practical quality of the training material, they should also question the legality and creative rights involved with AI companies aggregating data in the first place. This is a central question that is yet unresolved in the industry. For the last year, technology news has been filled with reports of lawsuits between AI companies and the creators of the content that they may have used to train their models (Patel 2024). For example, the Recording Industry Association of America (RIAA), a trade group representing large music publishing and recording companies in the United States, recently sued two AI music startups that allow users to enter in prompts that create new pieces of music, many with remarkable similarities to existing copyrighted works (Pearson 2024). Central to the lawsuits, as Pearson (2024) explained, is the question “can AI firms simply take whatever they want, turn it into a product worth billions, and claim it was fair use” (para. 3)? Through their use of AI tools, music educators may find themselves embroiled in the overall ethical quandary of whether the results they get from AI are ethically produced and sourced.

While overall copyright and creative rights issues will need to be resolved through courts, legislation, and government regulation, in the intervening time music educators are left to navigate what this means in their contexts. This challenge is not necessarily new, as it extends prior frustration with copyright laws that educators have experienced (Thibeault 2012; Tobias 2015). Part of educators’ considerations and applications of critical digital literacy (Jones and Hafner 2021) should be the potential damage AI may cause to people who create online educational content. For example, technology bloggers Federico Viticci and John Voorhees (2024) shared an open letter explaining how AI training has the potential to damage their business. Like Conte’s (2024) fears about the disruption of the relationship between content creator and the follower, Viticci and Voorhees (2024) explain, “we’re concerned that, this time, technology won’t open up new opportunities for creative people on the web. We fear that it’ll destroy them” (para. 8). They particularly lament that while search engines offer more of a “fair trade” as they send users to the creator’s website, AI companies, in contrast, “just took [the content].” To this point, Tim Berners-Lee (2024), credited with inventing the World Wide Web, discussed that AI companies and large platforms pose an existential threat to the web in that “the extent of power concentration … contradicts the decentralised spirit [of the web] that I originally envisioned” (para. 3). Educators using these tools in their practice might similarly examine to what extent their engagement with AI could damage the work of educational creators, in the process weakening the potential knowledge base for pedagogical resources online.

The lack of opportunities to critically examine and evaluate AI responses and results is a central thread in this paper and a needed element of engagement with AI. This is of particular import because prior research (Gillespie 2014) suggests that algorithmic results in other contexts, like social media, operate with a type of “mechanical neutrality” (171), meaning there is a tendency for users to heed the product as authoritative and unbiased. The way AI separates recommendations and responses from the sources that created them has the potential to broaden this belief and amplify AI’s influence on a field. Further, AI algorithms have the potential to lessen agency and engagement with content. To this point, Chayka (2024), in a broad discussion of algorithmic influences, explained, “the more automated an algorithmic feed is, the more passive it makes us as consumers” (108). The result could be recommendations that are viewed as authoritative and are presented in ways that limit critical engagement. In effect, teachers could feel less of a need to engage critically since they imbue the mechanized results with authority.

Algorithmic authority then comes with two potential misunderstandings. First, algorithms are not neutral (Chayka 2024; Gillespie 2018; Selwyn 2019). To this point, Selwyn (2019) explains that since algorithms use rules constructed by people, educators should ask three critical questions: “(i) what rules are being followed? (ii) whose rules are they?, and (iii) what values and assumptions do they reflect? Like any rule-making process, algorithms do not magically fall out of the sky. Somebody somewhere sets down a series of complex coded instructions and protocols to be repeatedly followed” (92).

Recognizing that AI is a human creation opens more potential for critique. It is like the type of criticality recommended by Apple (2014), who explained, “the curriculum is never simply a neutral assemblage of knowledge, somehow appearing in the texts and classrooms of a nation. It is always part of a selective tradition, someone’s selection, some group’s vision of legitimate knowledge” (22). These concerns align closely with Jones and Hafner’s (2021) notions of critical digital literacy, and educators’ use of AI should be built upon foundational critical evaluation. Unfortunately, the algorithmic and opaque nature of the tools may lead to more passivity than inquiry.

This leads to the second misunderstanding: while AI is frequently marketed as a tool of empowerment (Selwyn 2019, 2022), it may have the opposite effect. The more educators trust recommendations from AI tools in their work, the more power and control they cede in the process. While authors of curriculum literature in music education consistently ask teachers to become more critical, evaluative, and aware of their choices (e.g., Allsup 2010; Benedict 2010), AI may do the opposite. Selwyn (2019) explained that the effect could be that AI tools “deskill and demean the teachers they are assisting” (123). The potential result is not teachers working more critically, but instead a decline in teachers’ engagement with thoughtful curriculum work.

Next Steps for AI and Music Education

Returning to Moore’s (2014) technology integration framework, it is plausible that AI has not yet “crossed the chasm” for music educators, but continued and expanded use is likely. As AI further develops, it is also possible that LLMs and related tools will become increasingly integrated into devices and applications teachers and students already regularly use. For example, both Google and Apple have made AI integration a central aspect of their newest mobile device operating systems. This integration may make adoption appear more continuous and potentially lessen the inertia needed to cross the chasm. While still recognizing that integration into teachers’ professional work typically is more challenging (O’Leary and Bannerman 2025), the increasing ubiquity of AI makes the development of critical literacies more vital, and these literacies will not develop on their own. Prior literature on technology integration shows that critical and thoughtful engagement with technology is taught and developed; it does not occur simply through prolonged engagement (Bannerman and O’Leary 2021; Francom 2020; O’Leary and Bannerman 2025). Critical literacies will need to be cultivated intentionally and will not emerge simply through the use of, and exposure to, AI tools (Pangrazio and Selwyn 2023; Schroeder and Curcio 2022). Music educators’ data literacies should be a meaningful component of future professional development and curricular reforms in music teacher education.

Music educators are not powerless, and the critical literacies they develop may allow them to inform the policies and practices of their colleagues, schools, and school districts in AI integration. A first step might be to ask that the foundational element of transparency be demanded of any tool that is used in a classroom. To this point, Chayka (2024) explained: “The quickest way to change how digital platforms work may be to mandate transparency: forcing companies to explain how and when their algorithmic recommendations are working. Transparency would at least give users more information about the decisions constantly being made about what to show us. And if we know how algorithms work, perhaps we’ll be better able to resist their influence and make our own decisions” (195).

Without transparency, elements of critical evaluation are impossible in educational contexts (van Dijck et al. 2018). Sufficient transparency should be a prerequisite for any school-based engagement with AI. Teachers might demand from their educational leaders, administrators, and others that any tool that is adopted or suggested for use in their contexts aligns with basic standards for sound technology integration. For example, the International Society for Technology in Education (ISTE) standards for educators suggest that teachers should “mentor students in safe, legal, and ethical practices with digital tools and the protection of intellectual rights and property” (ISTE n.d., section 2.2c). By engaging in robust critical reflection and developing the needed literacies to inform that process, discussions of AI and its uses in the classroom may become powerful ways for schools and educators to model thoughtful technological engagement and consider the broader consequences and affordances of AI’s use in schools.

Conclusion

Through this paper, I have speculated on possible outcomes and considerations that might happen through music educators’ engagement with AI. Embedded in this discussion is a desire to combat the perceived inevitability and even powerlessness many feel related to the tools. The iPad advertisement referenced at the beginning of this article generated such a dramatic response partly because so many felt that huge corporations would continue to develop tools and products with increased potential to disrupt or even obviate their work. Yet this does not have to be the case. Music educators should begin posing questions and raising concerns because, as Selwyn (2022) explained, “the debate around AI in education needs to be approached in contestable terms—as a site of struggle and politics, rather than a neutral benign addition to classrooms” (626). Critical literacy and advocacy should be central components of the debate and the degree to which teachers can engage with technology integration in thoughtful and critical ways represents our best possible path forward.

About the Author

Emmett O’Leary is an assistant professor of music education in the School of Performing Arts at the Virginia Polytechnic Institute and State University in Blacksburg, Virginia. Prior to his work in Virginia, he served as an associate professor of music education at the Crane School of Music, SUNY-Potsdam and as an assistant band director at the University of Notre Dame. Emmett is a graduate of the University of Idaho (B.M. Music Education), Washington State University (M.A. Music), Indiana University Purdue University at Indianapolis (M.S. Music Technology), and Arizona State University (PhD Music Education). His research interests include competition in music education, instrumental music pedagogy, popular music pedagogy, media and platform studies, and music technology.

References

Aguilar, Stephen J., Dan Silver, and Morgan S. Polikoff. 2022. Analyzing 500,000 TeachersPayTeachers.com lesson descriptions shows focus on K-5 and lack of common core alignment. Computers and Education Open 3: 100081. https://doi.org/10.1016/j.caeo.2022.100081

Allsup, Randall E. 2010. Choosing music literature. In Critical issues in music education, edited by Harold Abeles and Lori Custodero, 210–35. Oxford University Press.

Apple, Michael. 2014. Official knowledge: Democratic education in a conservative age. 3rd ed. Routledge.

Bannerman, Julie, and Emmett O’Leary. 2021. Digital natives unplugged: Challenging assumptions of preservice music educators’ technological skills. Journal of Music Teacher Education 109 (2): 38–46. https://doi.org/10.1177/1057083720951462

Bauer, William I. 2013. The acquisition of musical technological pedagogical and content knowledge. Journal of Music Teacher Education 22 (2): 51–64. https://doi.org/10.1177/1057083712457881

Bauer, William I. 2014. Music learning and technology. New Directions 1. https://www.newdirectionsmsu.org/issue-1/bauer-music-learning-and-technology/

Bauer, William I. 2020. Music learning today: Digital pedagogy for creating, performing, and responding to music. 2nd ed. Oxford University Press.

Baym, Nancy. 2018. Playing to the crowd. New York University Press.

Benedict, Cathy. 2010. Curriculum. In Critical issues in music education, 2nd ed., edited by Harold Abeles and Lori Custodero, 149–74. Oxford University Press.

Berners-Lee, Tim. 2024. Marking the Web’s 35th Birthday: An open letter. World Wide Web Foundation. https://webfoundation.org/2024/03/marking-the-webs-35th-birthday-an-open-letter

Brewer, Wesley D., and David A. Rickels. 2014. A content analysis of social media interactions in the Facebook band directors group. Bulletin of the Council for Research in Music Education 201: 7–22. https://doi.org/10.5406/bulcouresmusedu.201.0007

Burgess, Jean, and Joshua Green. 2018. YouTube: Online video and participatory culture. 2nd ed. Polity Press.

Carpenter, Jeffery P., and Catharyn C. Shelton. 2022. Educators’ perspectives on and motivations for using TeachersPayTeachers.com. Journal of Research on Technology in Education 56 (2): 218–32. https://doi.org/10.1080/15391523.2022.2119452

Carpenter, Jeffery P., Catharyn C. Shelton, and Stephanie E. Schroeder. 2022. The education influencer: A new player in the educator professional landscape. Journal of Research on Technology in Education 55 (5): 749–64. https://doi.org/10.1080/15391523.2022.2030267

Cayari, Christopher. 2011. The YouTube effect: How YouTube has provided new ways to consume, create, and share music. International Journal of Education and the Arts 12 (6): 1–28.

Chayka, Kyle. 2024. Filterworld: How algorithms flattened culture. Doubleday.

Conte, Jack. 2024, March 16. Death of the follower and the future of creativity on the web with Jack Conte | SXSW 2024 Keynote. YouTube video, 46:55. https://www.youtube.com/watch?v=5zUndMfMInc&t=1546s

Cooper, Patrick K. 2024. Music teachers’ labeling accuracy and quality ratings of lesson plans by artificial intelligence (AI) and humans. International Journal of Music Education. Advance online publication. https://doi.org/10.1177/02557614241249163

Dammers, Richard J. 2010a. A case study of the creation of a technology-based music course. Bulletin of the Council for Research in Music Education 186: 55–65. https://doi.org/10.2307/41110434

Dammers, Richard J. 2010b. A survey of technology-based music classes in New Jersey high schools. Contributions to Music Education 36 (2): 25–43.

Dammers, Richard J. 2012. Technology-based music classes in high schools in the United States. Bulletin of the Council for Research in Music Education 194: 13–19. https://doi.org/10.5406/bulcouresmusedu.194.0073

Dorfman, Jay. 2015. Perceived importance of technology skills and conceptual understandings for pre-service, early- and late-career music teachers. College Music Symposium 55: 1–19. http://doi.org/10.18177/sym.2015.55.itm.10861

Dorfman, Jay. 2016. Exploring models of technology integration into music teacher preparation programs. Visions of Research in Music Education 28.

Dorfman, Jay. 2022. Theory and practice of technology-based music instruction. 2nd ed. Oxford University Press.

Elpus, Kenneth. 2020. Access to arts education in America: The availability of visual art, music, dance, and theater courses in U.S. high schools. Arts Education Policy Review 123 (2): 50–69. https://doi.org/10.1080/10632913.2020.1773365

Ertmer, Peggy A., Anne T. Ottenbreit-Leftwich, Olgun Sadik, Emine Sendurur, and Polat Sendurur. 2012. Teacher beliefs and technology integration practices: A critical relationship. Computers and Education 59 (2): 423–35. https://doi.org/10.1016/j.compedu.2012.02.001

Francom, Gregory M. 2020. Barriers to technology integration: A time-series survey study. Journal of Research on Technology in Education 52 (1): 1–16. https://doi.org/10.1080/15391523.2019.1679055

Gillespie, Tarleton. 2014. The relevance of algorithms. In Media Technologies: Essays on Communication, Materiality, and Society, edited by Tarleton Gillespie, Pablo J. Boczkowski, and Kirsten A. Foote, 167–95. MIT Press.

Gillespie, Tarleton. 2018. Regulation of and by platforms. In The Sage Handbook of Social Media, edited by Jean Burgess, Alice Marwick, and Thomas Poell, 254–78. Sage.

Haning, Marshall. 2016. Are they ready to teach with technology? An investigation of technology instruction in music teacher education programs. Journal of Music Teacher Education 25 (3): 78–90. https://doi.org/10.1177/1057083715577696

Harris, Lauren M., Leanna Archambault, and Catharyn C. Shelton. 2021. Issues of quality on Teachers Pay Teachers: An exploration of best-selling U.S. history resources. Journal of Research on Technology in Education 55 (4): 608–27. https://doi.org/10.1080/15391523.2021.2014373

Hodge, Emily M., Serena J. Salloum, and Susanna L. Benko. 2019. The changing ecology of the curriculum marketplace in the era of the common core state standards. Journal of Educational Change 20 (4): 425–46. https://doi.org/10.1007/s10833-019-09347-1

Holster, Jacob. 2024. Augmenting music education through AI: Practical applications of ChatGPT. Music Educators Journal 110 (4): 36–42. https://doi.org/10.1177/00274321241255938

International Society for Technology in Education. n.d. ISTE standards: For educators. International Society for Technology in Education. https://iste.org/standards/educators

Jones, Rodney H., and Christoph A. Hafner. 2021. Understanding digital literacies: A practical introduction. 2nd ed. Routledge.

Kaufman, Julia H., Sy Doan, Andrea Padro Tuma, Ashley Woo, Daniella Henry, and Rebecca Ann Lawrence. 2020. How instructional materials are used and supported in US K-12 classrooms. Research Report from RAND Corporation. https://www.rand.org/pubs/research_reports/RRA134-1.html

Koner, Karen, and John Eros. 2019. Professional development for the experienced music educator: A review of recent literature. Update: Applications of Research in Music Education 37 (3): 12–19. https://doi.org/10.1177/8755123318812426

Lopatto, Elizabeth. 2024, May 9. Apple doesn’t understand why you use technology. The Verge. https://www.theverge.com/2024/5/9/24152987/apple-crush-ad-piano-ipad

Luitse, Dieuwertje, and Wiebke Denkena. 2021. The great transformer: Examining the role of large language models in the political economy of AI. Big Data & Society 8 (2). https://doi.org/10.1177/20539517211047734

Marwick, Alice E. 2015. You may know me from YouTube: (Micro‐)celebrity in social media. In A companion to celebrity, edited by P. David Marshall and Sean Redmond, 333–50. Wiley.

Metz, Cade, and Stuart A. Thompson. 2024, April 6. What to know about tech companies using A.I. to teach their own A.I. The New York Times. https://www.nytimes.com/2024/04/06/technology/ai-data-tech-companies.html

Mishra, Punya, and Matthew J. Koehler. 2006. Technological pedagogical content knowledge: A new framework for teacher knowledge. Teachers College Record 108 (6): 1017–54. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Moore, Geoffrey A. 2014. Crossing the chasm: Marketing and selling disruptive products to mainstream consumers. 3rd ed. HarperCollins.

Morozov, Evgeny. 2013. To save everything, click here: The folly of technological solutionism. Public Affairs.

O’Leary, Emmett. 2020. The ukulele and YouTube: A content analysis of seven prominent YouTube ukulele channels. Journal of Popular Music Education 4 (2): 175–91. https://doi.org/10.31386/jpme_0024_1

O’Leary, Emmett. 2022. The quarantine ukulele live streams: The creativities of an online music community during a global health crisis. In The Routledge companion to creativities in music education, edited by Clint Randles and Pamela Burnard, 341–52. Routledge. https://doi.org/10.4324/9781003248194-34

O’Leary, Emmett. 2023. Music education on YouTube and the challenges of platformization. Action, Criticism, and Theory for Music Education 22 (4): 14–43. https://doi.org/10.22176/act22.4.14

O’Leary, Emmett, and Julie Bannerman. 2023. Technology integration in music education and the COVID-19 Pandemic. Bulletin of the Council for Research in Music Education 238: 23–40. https://doi.org/10.5406/21627223.238.02

O’Leary, Emmett, and Julie Bannerman. 2025. Online curriculum marketplaces and music education: A critical analysis of music activities on TeachersPayTeachers.com. International Journal of Music Education. Advance online publication. https://doi.org/10.1177/02557614241307242

Palmquist, Jane E., and Gail V. Barnes. 2015. Participation in the school orchestra and string teachers Facebook v2 group: An online community of practice. International Journal of Community Music 8 (1): 93–103. https://doi.org/10.1386/ijcm.8.1.93_1

Pangrazio, Luca, and Neil Selwyn. 2023. Critical data literacies: Rethinking data and everyday life. MIT Press.

Patel, Nilay. 2024, February 15. How AI copyright lawsuits could make the whole industry go extinct. The Verge. https://www.theverge.com/24062159/ai-copyright-fair-use-lawsuits-new-york-times-openai-chatgpt-decoder-podcast

Pearson, Jordan. 2024, June 26. The RIAA versus AI, explained. The Verge. https://www.theverge.com/24186085/riaa-lawsuits-udio-suno-copyright-fair-use-music

Poell, Thomas, David Nieborg, and Brooke E. Duffy. 2022. Platforms and cultural production. Polity Press.

Polikoff, Morgan. 2019. The Supplemental Curriculum Bazaar: Is what’s online any good? Research report from Thomas B. Fordham Institute. https://fordhaminstitute.org/national/research/supplemental-curriculum-bazaar

Puentedura, Ruben. 2010. SAMR and TPACK: Intro to advanced practice. Hippasus. http://hippasus.com/resources/sweden2010/SAMR_TPCK_IntroToAdvancedPractice.pdf

Rickels, David A., and Wesley D. Brewer. 2017. Facebook Band Director’s Group: Member usage behaviors and perceived satisfaction for meeting professional development needs. Journal of Music Teacher Education 26 (3): 77–92. https://doi.org/10.1177/1057083717692380

Rohwer, Debbie. 2023. Research-to-Resource: ChatGPT as a tool in music education research. Update: Applications of Research in Music Education 42 (3): 4–7. https://doi.org/10.1177/87551233231210875

Ruthmann, S. Alex, Evan Tobias, Clint Randles, and Matthew Thibeault. 2015. Is it the technology? Challenging technological determinism in music education. In Music education: Navigating the future, edited by Clint Randles, 122–38. Routledge.

Sawyer, Amanda G., Katie Dredger, Joy Myers, Susan Barnes, Reece Wilson, Jesse Sullivan, and Daniel Sawyer. 2020. Developing teachers as critical curators: Investigating elementary preservice teachers’ inspirations for lesson planning. Journal of Teacher Education 71 (5): 518–36. https://doi.org/10.1177/0022487119879894

Schroeder, Stephanie, and Rachelle Curcio. 2022. Critiquing, curating, and adapting: Cultivating 21st-century critical curriculum literacy with teacher candidates. Journal of Teacher Education 73 (2): 129–44. https://doi.org/10.1177/00224871221075279

Selwyn, Neil. 2019. Should robots replace teachers? Polity Press.

Selwyn, Neil. 2022. The future of AI and education: Some cautionary notes. European Journal of Education 57: 620–31. https://doi.org/10.1111/ejed.12532

Shaw, Brian. 2024. Artificial intelligence and assessment: Three implications for music educators. Music Educators Journal 111 (2): 19–25. https://doi.org/10.1177/00274321241296118

Shelton, Catharyn C., and Leanna M. Archambault. 2019. Who are online teacherpreneurs and what do they do? A survey of content creators on TeachersPayTeachers.com. Journal of Research on Technology in Education 51 (4): 398–414. https://doi.org/10.1080/15391523.2019.1666757

Shelton, Catharyn C., Matthew J. Koehler, Spencer P. Greenhalgh, and Jeffery P. Carpenter. 2022. Lifting the veil on TeachersPayTeachers.com: An investigation of educational marketplace offerings and downloads. Learning, Media and Technology 47 (2): 268–87. https://doi.org/10.1080/17439884.2021.1961148

Shirky, Clay. 2008. Here comes everybody: The power of organizing without organizations. Penguin Books.

Shulman, Lee. 1986. Those who understand: Knowledge growth in teaching. Educational Researcher 15 (2): 4–14. https://doi.org/10.3102/0013189X015002004

Silver, Daniel. 2022. A theoretical framework for studying teachers’ curriculum supplementation. Review of Educational Research 92 (3): 455–89. https://doi.org/10.3102/00346543211063930

Spangler, Todd. 2024, May 29. Why Apple’s iPad ad fell flat: Company failed to understand it conjured fears of ‘tech kind of destroying humanity.’ Variety. https://variety.com/2024/digital/news/why-apple-ipad-ad-controversy-backlash-1236017855/

Testa, Michael A. 2021. Music technology in the classroom (Publication No. 28648550). PhD diss., University of Massachusetts Lowell. ProQuest Dissertations and Theses Global.

Thibeault, Matthew D. 2012. From compliance to creative rights: Rethinking intellectual property in the age of new media. Music Education Research 14 (1): 103–17. https://doi.org/10.1080/14613808.2012.657165

Thibeault, Matthew D. 2014. Algorithms and the future of music education: A response to Shuler. Arts Education Policy Review 115 (1): 19–25. https://doi.org/10.1080/10632913.2014.847355

Tobias, Evan S. 2015. Participatory and digital cultures in practice: Perspectives and possibilities in a graduate music course. International Journal of Community Music 8 (1): 7–26. https://doi.org/10.1386/ijcm.8.1.7_1

Tondeur, Jo, Johan van Braak, Peggy A. Ertmer, and Anne Ottenbreit-Leftwich. 2017. Understanding the relationship between teachers’ pedagogical beliefs and technology use in education: A systematic review of qualitative evidence. Educational Technology Research and Development 65 (3): 555–75. https://doi.org/10.1007/s11423-016-9481-2

Tyack, David, and Larry Cuban. 1995. Tinkering toward utopia: A century of public school reform. Harvard University Press.

Van Dijck, José, Thomas Poell, and Martijn de Waal. 2018. The platform society. Oxford University Press.

Voorhees, John, and Federico Viticci. 2024, July 2. AI companies need to be regulated: An open letter to the U.S. Congress and European Parliament. MacStories. https://www.macstories.net/stories/ai-companies-need-to-be-regulated-an-open-letter-to-the-u-s-congress-and-european-parliament/

Waldron, Janice L. 2018. Questioning 20th century assumptions about 21st century music practices. Action, Criticism, and Theory for Music Education 17 (1): 97–113. https://doi.org/10.22176/act17.1.97